Intelligent IoT and Fog Computing Trends

- Last Updated: December 2, 2024

Frank Lee

IoT cloud computing platforms review

IoT powered by PaaS and SaaS

Cloud-based Platform as a Service (PaaS) and Software as a Service (SaaS) technologies have been used in enterprise applications for many years. In recent years, PaaS and SaaS have also become major driving forces of the IoT market across a wide range of products. PaaS providers offer ready-to-use platform services like security, data storage, device management and big data analysis. SaaS providers deliver application-level services like billing, software management and visualization tools. Google IoT Core, Microsoft Azure and AWS IoT are examples of PaaS/SaaS platforms.

Advantages of PaaS and SaaS

PaaS/SaaS platforms are important for the success of many small IoT startup companies:

- Product companies can develop and deploy applications quickly. What used to take months can be shortened to weeks.

- Companies can scale as needed, with less startup cost, while they are validating new products in new markets.

- Companies no longer need to maintain their own data centers. These platforms usually provide better reliability and uptime than individual product companies can achieve, while also reducing operational overhead.

Disadvantages of Pure Cloud Service Model

In a pure cloud-centric model, all raw data are aggregated and streamed to the cloud for storage and processing. Despite its advantages, the model has some major drawbacks:

- Unpredictable response time from cloud servers to endpoints

- Unreliable cloud connections can bring down the service

- Excessive data can overburden infrastructure

- Privacy issues when sensitive customer data are stored in the cloud

- Difficulties in scaling to an ever-increasing number of sensors and actuators
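A back-of-the-envelope calculation makes the "excessive data" and scaling drawbacks concrete. The sketch below is a minimal illustration with hypothetical numbers for sensor count, sample rate and sample size. It multiplies them out to compare the raw uplink bandwidth with what remains if a local node forwards only one periodic summary per sensor.

```python
def uplink_bits_per_second(num_sensors: int, samples_per_second: float,
                           bytes_per_sample: int) -> float:
    """Uplink bandwidth if every sample (or summary) is streamed to the cloud."""
    return num_sensors * samples_per_second * bytes_per_sample * 8


# Hypothetical site: 2,000 vibration sensors sampling at 1 kHz, 4 bytes per sample.
raw = uplink_bits_per_second(2_000, 1_000, 4)

# Hypothetical alternative: a local node sends one 64-byte summary per sensor per second.
summarized = uplink_bits_per_second(2_000, 1, 64)

print(f"raw stream: {raw / 1e6:.1f} Mbit/s")
print(f"summaries:  {summarized / 1e6:.3f} Mbit/s")
print(f"reduction:  {raw / summarized:.1f}x")
```

Because raw traffic grows linearly with both sensor count and sample rate, doubling either one doubles the load on the cloud link.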

Overview of Fog Computing

Industrial Internet of Things

Fog computing, sometimes also known as edge computing, has mainly been adopted in the Industrial Internet of Things (IIoT) space. The design places local computing nodes between the endpoints (e.g. sensors, cameras) and cloud data centers. These nodes gather, store and process data instead of relying on a remote cloud data center. Connected devices send data to, and receive instructions from, a nearby node, usually installed on premises. The node could be a gateway device, such as a switch or router, with extra processing and storage capabilities. It can receive, process and react to incoming data in real time.
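The local node's role can be sketched as a small event handler. It reacts immediately when a reading crosses a threshold, and it forwards only a compact summary upstream. This is a minimal illustration, not an OpenFog or vendor API. The threshold, the window size and the `actuate`/`send_to_cloud` callbacks are hypothetical placeholders.

```python
from statistics import mean
from typing import Callable, List


class FogNode:
    """Hypothetical on-premises node: acts locally, forwards summaries to the cloud."""

    def __init__(self, threshold: float, window: int,
                 actuate: Callable[[float], None],
                 send_to_cloud: Callable[[dict], None]) -> None:
        self.threshold = threshold
        self.window = window
        self.actuate = actuate              # hypothetical local actuator hook
        self.send_to_cloud = send_to_cloud  # hypothetical uplink hook
        self.buffer: List[float] = []

    def on_reading(self, value: float) -> None:
        # Real-time path: react locally without waiting for a cloud round trip.
        if value > self.threshold:
            self.actuate(value)
        # Batch path: accumulate readings and upload one summary per full window.
        self.buffer.append(value)
        if len(self.buffer) == self.window:
            self.send_to_cloud({"count": len(self.buffer),
                                "mean": mean(self.buffer),
                                "max": max(self.buffer)})
            self.buffer.clear()


# Usage: record what the node would do with six readings.
alarms, uploads = [], []
node = FogNode(threshold=80.0, window=3,
               actuate=alarms.append, send_to_cloud=uploads.append)
for reading in [20.0, 85.0, 30.0, 40.0, 50.0, 90.0]:
    node.on_reading(reading)
print(alarms)   # readings that triggered a local action
print(uploads)  # summaries sent upstream
```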

Standardization

As fog computing applications and vendors grow in number, data and interface compatibility becomes an issue. A lack of interoperability between products in the industry will hinder adoption of the technology. The OpenFog Consortium was founded in 2015, backed by companies like Cisco, ARM, Dell and Microsoft. It is driving standards and best practices in fog computing system design (Figure 1), with the goal of facilitating the adoption of cross-industry standards and frameworks.


Growing Fog Computing Applications

Mission Critical Applications

In addition to IIoT, consumer-facing IoT applications are also becoming more sophisticated and mission critical. In the first wave of consumer IoT, the industry and consumers explored interesting applications and hype, which were often less demanding (e.g. changing the color of light bulbs). However, as the IoT market matures, IoT will become the backbone of infrastructure that supports important activities in people’s daily lives. The status quo is not enough: reliability and real-time response will be essential.

The Automated Driving System (ADS) is one such example. ADS employs multiple advanced technologies: multi-modal sensors, computer vision, artificial intelligence, machine learning and more. The system performs data fusion, image analysis, mapping and prediction to determine the best action and the controls for the drivetrain.

All of this needs to be done reliably, within milliseconds and without interruption. The data bandwidth and latency requirements mandate a powerful processing node in the car, with built-in redundancy.
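A quick calculation shows why milliseconds matter. The speed and latency values below are illustrative assumptions, not figures from any ADS specification. The function converts a speed and a delay into the distance the car covers before it can react.

```python
def distance_during_delay(speed_kmh: float, delay_ms: float) -> float:
    """Metres travelled while waiting delay_ms milliseconds at speed_kmh."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * delay_ms / 1000


# Illustrative comparison at 108 km/h (30 m/s): in-car processing vs. a slow cloud round trip.
for label, delay in [("in-car node, 10 ms", 10), ("cloud round trip, 200 ms", 200)]:
    print(f"{label}: {distance_during_delay(108, delay):.2f} m")
```

Under these assumptions, every 100 ms of extra latency costs about 3 m of travel before the system can respond.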

Intelligent IoT Applications

Besides ADS, artificial intelligence and computer vision applications will also drive increased demand for fog computing. An intelligent IoT system does more than collect and analyze data for human consumption; it needs to respond to situations without human intervention. To achieve that, it performs real-time AI inference using data from a large number of sensors. It then sends commands to actuators in machines, drones or robots to carry out actions. In an unsupervised setting, the AI engine also collects the real-time results to evaluate the next actions to take.
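The sense, infer, act and evaluate cycle described above can be sketched as a control loop. Every name here is a hypothetical stand-in. `read_sensors`, `infer` and `send_command` represent whatever sensor drivers, on-device model and actuator interface a real system would use. The toy "model" is a simple threshold rule so that the sketch runs.

```python
from typing import Callable, Dict, List


def control_loop(read_sensors: Callable[[], Dict[str, float]],
                 infer: Callable[[Dict[str, float], List[str]], str],
                 send_command: Callable[[str], Dict[str, float]],
                 steps: int) -> List[str]:
    """Run a fixed number of sense -> infer -> act -> evaluate cycles."""
    history: List[str] = []
    observation = read_sensors()
    for _ in range(steps):
        # Infer the next action from the latest observation and past actions.
        action = infer(observation, history)
        # Act, then evaluate: the resulting state feeds the next inference.
        observation = send_command(action)
        history.append(action)
    return history


# Toy simulation (hypothetical): a drone climbing toward a 10 m target altitude.
state = {"altitude": 0.0}

def read_sensors() -> Dict[str, float]:
    return dict(state)

def infer(obs: Dict[str, float], history: List[str]) -> str:
    # Stand-in for real-time AI inference: a simple threshold rule.
    return "climb" if obs["altitude"] < 10.0 else "hold"

def send_command(action: str) -> Dict[str, float]:
    # Stand-in for an actuator: each "climb" raises the drone by 4 m.
    if action == "climb":
        state["altitude"] += 4.0
    return dict(state)

actions = control_loop(read_sensors, infer, send_command, steps=5)
print(actions)
```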

We need a hybrid fog/cloud model. In this model, edge processing nodes handle time-sensitive computer vision and AI inference tasks, while cloud nodes handle non-real-time or soft real-time functions like software updates, contextual information collection and long-term big data analysis.
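One way to express the hybrid split is a placement rule. A task goes to the edge node when its deadline is shorter than the expected cloud round trip, and to the cloud otherwise. The 150 ms round-trip figure and the task list are assumptions for illustration. A real scheduler would also weigh bandwidth, privacy and node load.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Task:
    name: str
    deadline_ms: float  # latest acceptable time to produce a result


def place_tasks(tasks: List[Task], cloud_round_trip_ms: float) -> Dict[str, str]:
    """Assign each task to 'edge' or 'cloud' by comparing its deadline to cloud latency."""
    return {t.name: "edge" if t.deadline_ms < cloud_round_trip_ms else "cloud"
            for t in tasks}


# Hypothetical workload with illustrative deadlines.
tasks = [
    Task("object detection", 30),
    Task("AI inference for actuator control", 10),
    Task("software update", 3_600_000),
    Task("long-term big data analysis", 86_400_000),
]
placement = place_tasks(tasks, cloud_round_trip_ms=150)
print(placement)
```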

GPU: The Dominant Machine Learning Platform

State-of-the-art artificial intelligence systems use technologies like Deep Neural Networks (DNNs). Most of the best DNNs have deep network structures (many layers of nonlinear processing units) to achieve higher accuracy. As a result, their implementations usually demand a high volume of data movement and a large number of compute units. Machine learning and AI researchers have therefore turned to Graphics Processing Units (GPUs), which were built primarily for gaming platforms.
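Counting the work in a stack of fully connected layers shows how depth drives compute demand. The layer sizes below are arbitrary examples. The counts use one weight per input-output pair plus one bias per output, and one multiply-accumulate (MAC) per weight for each input. These formulas hold for dense layers only; convolutional layers, common in vision DNNs, are counted differently. Every parameter must also be fetched from memory, which is where the heavy data movement comes from.

```python
from typing import List, Tuple


def dense_stack_cost(layer_sizes: List[int]) -> Tuple[int, int]:
    """Return (parameters, MACs per input) for a fully connected layer stack."""
    params = 0
    macs = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        params += n_in * n_out + n_out  # weights + biases
        macs += n_in * n_out            # one multiply-accumulate per weight
    return params, macs


# Arbitrary example: the same input/output sizes with 1 vs. 8 hidden layers.
shallow = dense_stack_cost([1024, 512, 10])
deep = dense_stack_cost([1024] + [512] * 8 + [10])
print(f"shallow: {shallow[0]:,} params, {shallow[1]:,} MACs per input")
print(f"deep:    {deep[0]:,} params, {deep[1]:,} MACs per input")
```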

Since 2007, Nvidia has developed its Compute Unified Device Architecture (CUDA) technology to apply the power of its graphics chips to compute problems beyond 3D shader processing. By design, a GPU has high data throughput and a large number of processing cores. This makes it well suited to compute-intensive problems like linear algebra, signal processing and machine learning.
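Matrix multiplication shows why GPUs suit linear algebra: every output element is an independent dot product, so many cores can compute them at the same time. The plain-Python sketch below runs sequentially and only illustrates that structure. On a GPU, a simple CUDA kernel might assign each (i, j) output element to its own thread. Optimized libraries use more elaborate tiling.

```python
from typing import List

Matrix = List[List[float]]


def output_element(a: Matrix, b: Matrix, i: int, j: int) -> float:
    """One output element: depends only on row i of a and column j of b."""
    return sum(a[i][k] * b[k][j] for k in range(len(b)))


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # No element reads another element's result, so these calls could run in parallel.
    return [[output_element(a, b, i, j) for j in range(len(b[0]))]
            for i in range(len(a))]


print(matmul([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]))
```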

The CUDA programming API allows research scientists in many domains, including AI and machine learning, to program GPUs and leverage their power more easily. The availability and continuous improvement of GPU systems in the consumer market allow AI researchers to train and validate designs within a reasonable amount of time and budget. Fast forward to the present, and Nvidia’s CUDA platform more or less dominates the machine learning and AI market.

Embedded AI on Edge

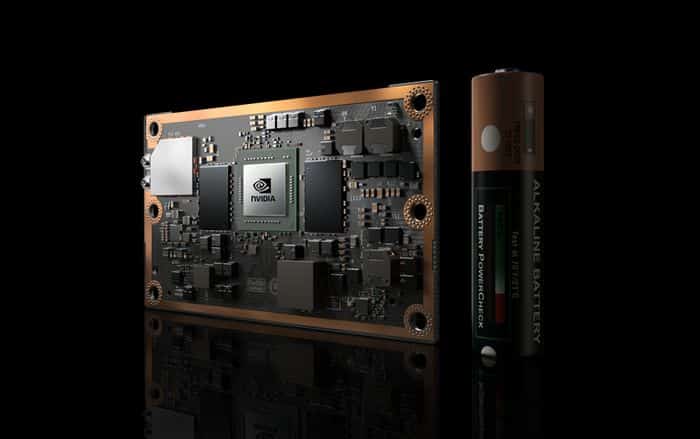

However, for embedded or mobile systems, typical GPUs are far too expensive and power-hungry. In the last few years, companies like Nvidia, Intel, ARM and Apple have put a lot of effort into embedded AI system designs. Nvidia’s powerful Tegra processor with CUDA technology is currently the market leader. Its Jetson platform (Figure 2) has been widely used in areas like smart drones and ADS.

Intel is also actively investing in similar embedded AI technologies, as its recent acquisition of computer vision chip company Movidius shows. Qualcomm, MediaTek, Huawei, AMD and several startups are also eyeing this rapidly growing market. They are building neural network capabilities into their future System on Chip (SoC) designs.

These technologies will find their way into the market in the next few years. Chip vendors are also working closely with software developers to optimize implementations on their processors.

Furthermore, embedded software developers are looking for neural network architectures that strike the right balance between complexity and accuracy requirements. These requirements usually differ greatly from one application to another.
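Striking that balance often comes down to picking the most accurate model that fits a device's compute budget and still meets the application's accuracy floor. The candidate names, accuracy figures and operation counts below are made-up placeholders, not benchmark results. Only the selection rule is the point.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    accuracy: float  # hypothetical validation accuracy, 0 to 1
    gmacs: float     # hypothetical billions of MACs per inference


def pick_model(candidates: List[Candidate], budget_gmacs: float,
               min_accuracy: float) -> Optional[Candidate]:
    """Most accurate candidate within the compute budget that meets the accuracy floor."""
    feasible = [c for c in candidates
                if c.gmacs <= budget_gmacs and c.accuracy >= min_accuracy]
    return max(feasible, key=lambda c: c.accuracy, default=None)


# Made-up candidates for illustration only.
candidates = [
    Candidate("tiny", 0.86, 0.1),
    Candidate("small", 0.91, 0.6),
    Candidate("large", 0.95, 4.0),
]
print(pick_model(candidates, budget_gmacs=1.0, min_accuracy=0.90))
print(pick_model(candidates, budget_gmacs=1.0, min_accuracy=0.93))
```

The second call returns `None`. Under that budget, no model meets the stricter accuracy target, which signals a need for a bigger processor or a better-optimized architecture.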

Face recognition is one example. Its out-of-the-box accuracy and real-time requirements are very different for an access control system than for a photo tagging application. The two can differ by orders of magnitude in processing requirements, and therefore in system cost.
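A rough calculation shows how such requirements turn into processing demand. All workload numbers below are hypothetical. The formula multiplies MACs per face, faces per frame and frames per second to give the sustained compute rate a device must deliver.

```python
def required_gmacs_per_second(gmacs_per_face: float, faces_per_frame: float,
                              frames_per_second: float) -> float:
    """Sustained compute rate (billions of MACs per second) a device must deliver."""
    return gmacs_per_face * faces_per_frame * frames_per_second


# Hypothetical access control: larger, more accurate model on a live 30 fps camera.
access_control = required_gmacs_per_second(2.0, 1, 30)

# Hypothetical photo tagging: smaller model, 200 photos a day with about 5 faces each,
# spread evenly over 24 hours (86,400 s).
photo_tagging = required_gmacs_per_second(0.5, 5, 200 / 86_400)

print(f"access control: {access_control:.1f} GMAC/s")
print(f"photo tagging:  {photo_tagging:.4f} GMAC/s")
print(f"ratio: about {access_control / photo_tagging:,.0f}x")
```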

Conclusions

For the next generation of intelligent IoT systems, fog/cloud hybrid architecture will be the trend. In fact, big cloud service providers are starting to push into the fog computing space (The Big Three Make a Play for the Fog). They support standards efforts, like the OpenFog Consortium, and ecosystems in which IoT fog products can thrive. Engineers designing intelligent fog computing nodes or endpoints will see an increasing number of embedded processing platform choices in the next few years. In addition, engineers need to employ domain-specific algorithms and neural network designs to deliver products that stay within budget, reach the market quickly and meet usage requirements. We will explore some of these application domains in future posts.
