16 Questions About Self-Driving Cars, CES Highlights from the Show Floor, and Solving Social Problems with Machine Learning

- Last Updated: December 2, 2024

Yitaek Hwang

16 Questions About Self-Driving Cars

The following presentation is from Frank Chen of Andreessen Horowitz: “16 Questions About Self-Driving Cars.” The 30-minute talk covers the common technical, business, and social questions related to self-driving cars. Inspired by Artur Kiulian’s take on this talk, we have added some of our own thoughts to Frank’s presentation. For more of the a16z summit series, check out Last Week in the Future V14.0: “Mobile is Eating the World.”

Level-by-Level or Straight to Level 5?

The levels here refer to the categorization scheme put forth by the Society of Automotive Engineers (SAE). Level 0 is no automation; Level 5 is full automation. Car manufacturers will prefer a level-by-level approach to autonomous cars, adding automated features incrementally. Tech giants in Silicon Valley (Google, Uber, Tesla), on the other hand, will benefit from jumping straight to Level 5 to avoid the UX problems associated with partial or conditional automation. Google’s report on autonomous car crashes reveals that most of the accidents are caused by human error, as drivers are unaccustomed to non-human-like behavior in adverse conditions.
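For readers who want the levels spelled out, here is a minimal Python sketch of the SAE scale. The level names paraphrase the SAE J3016 categories; the `SAELevel` enum and the `needs_human_fallback` helper are hypothetical names of our own and do not come from Frank's talk.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, 0 (none) through 5 (full)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def needs_human_fallback(level: SAELevel) -> bool:
    """Return True when a human must drive, supervise, or be ready to take over.

    Assumption for this sketch: through Level 3 a person is still the
    fallback, which is where the handoff (UX) problems come from.
    """
    return level <= SAELevel.CONDITIONAL_AUTOMATION


if __name__ == "__main__":
    for level in SAELevel:
        print(level.value, level.name, needs_human_fallback(level))
```

The "straight to Level 5" camp is effectively betting on skipping every level for which `needs_human_fallback` returns `True`.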

Image Credit: Frank Chen

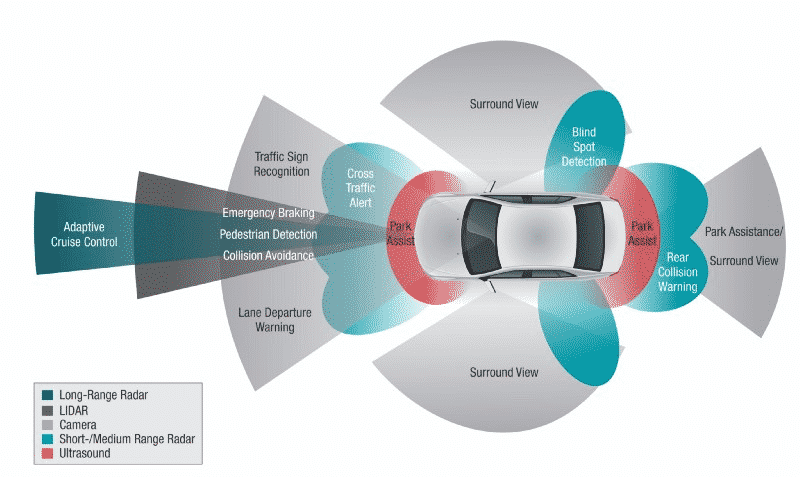

LiDAR Sensors or Cameras?

LiDAR sensors, the bucket-shaped devices on top of self-driving cars, provide a 3D laser map. They are costly (~$7,500, although Waymo has just announced a 90% cost reduction), and some argue that stereo cameras can capture the same information by computing 3D space from two offset images. The advantage of LiDAR sensors is their extra resolution and accuracy, which can help ease fears about self-driving cars.
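To make the stereo-camera argument concrete, here is a rough sketch of the standard pinhole relation for a rectified stereo pair: depth = focal length × baseline / disparity. The function name and the camera numbers (700 px focal length, 0.5 m baseline) are made-up assumptions for illustration, not figures from the talk.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Estimate distance (meters) to a point seen by both cameras.

    disparity_px is how far the point shifts between the left and right
    images; a smaller shift means the point is farther away.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical rig: 700 px focal length, cameras 0.5 m apart.
    for disparity in (35.0, 7.0, 6.0):
        depth = depth_from_disparity(700.0, 0.5, disparity)
        print(f"disparity {disparity:>4} px -> {depth:.1f} m")
```

With these assumed numbers, a single pixel of disparity error at long range (7 px versus 6 px) moves the estimate by several meters, which is one way to see why LiDAR's direct range measurement is considered more accurate.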

Image Credit: Frank Chen

Other Technical Questions

Will map providers add features to help self-driving cars detect obstacles or will it be the responsibility of supercomputers embedded in autonomous cars? Will end-to-end training of deep learning models be sufficient or will we still blend other software techniques fine-tuned by programmers? Lastly, how much real-world testing is required and is virtual world testing enough? Frank doesn’t provide answers to these questions, but lays out the important technical questions to pay attention to.

Image Credit: Frank Chen

Business Questions

While most people assume that the autonomous vehicle race is between the car manufacturers and the Silicon Valley giants, Frank highlights Chinese manufacturers as the up-and-coming contenders. He also considers the possibility of car companies transforming into Boeing-Airbus equivalents in the car industry, selling autonomous vehicles to fleet managers and service providers (e.g. Uber, Lyft). Lastly, Frank ponders the implications of self-driving cars for insurance.

Image Credit: Frank Chen

Social Questions

Frank quotes Carl Sagan, “It was easy to predict mass car ownership but hard to predict Walmart,” to describe the unforeseen ramifications of self-driving cars. How will commuting change? When will it become illegal to drive? And how will cities change? As discussed often with the rise of AI, policy and technology must work together. It’s unclear when and how self-driving cars will shape the future, but keep these questions in mind in preparing for a truly transformative technology.

Image Credit: Frank Chen

CES Highlights from the Show Floor

Aside from the dominance of Alexa (see LWITF V16.0), CES 2017 was labeled a disappointment by many. Steven Sinofsky, who has 26 CES shows behind him, gave a different take. He noted that CES 2017 was “one of lots of new, cool, and useful but not any firsts.” Calling the theme the “Year of Product Execution,” Steven laid out his observations from the show floor in his lengthy post “A Very Producty #CES2017.” Here we picked out some of his important takeaways by category.

Image Credit: www.ces.tech

Thoughts For Product Managers

“Software is the limiting factor, 100% of the time. As a corollary, a product that is not differentiated by software, arguably is not differentiated at all.”

Last week, we examined Ben Thompson’s take on operating systems in determining the success of Alexa. Mac OS X played a crucial role in differentiating Apple’s sleek hardware and making Apple the most valuable company in the world. This message also resonated with Steven as he looked at all the new products at CES.

“At planetary scale, the whole matters more than parts.”

Although he didn’t specifically mention this, this message rings especially true in IoT. Industry-wide fragmentation continues to plague the customer experience. Breakthrough products, including IoT applications, will come from companies that understand that “the whole and the seams between the breadth of partners required for a product” are more important than its individual components.

Voice and Cast

“By far the broad penetration, demonstration, and use of Alexa was the ‘big’ industry news. While nowhere near as big, the rise of every screen as a casting receiver is a second example of a new ‘runtime’ making its way broadly into the full range of products.”

Buried in the Alexa hype was the rise of Chromecast. Steven doesn’t want to see an app platform for every streaming service. TVs exist in a fragmented global market, and managing high-quality apps for all of them will be extremely difficult. Chromecast and Apple TV help mitigate this issue. We will increasingly see devices with native support for one of these services.

IoT

“At CES this year, there was little new in a broad array of sensors and automation to report. What was visible though was a maturing and improved productization.”

For many, IoT hasn’t delivered on its potential yet. It’s perceived to be a luxury or a collection of useless devices (I don’t blame them, given some of these dumb smart devices). However, Steven compares these early struggles to our frustrations with early digital cameras, PCs, and the internet. Luxuries will become necessities, as they did with smartphones. Steven saw more complete systems for IoT applications such as home security and has high hopes for continual improvement.

VR/AR

“The raging debate over VR ‘versus’ AR will continue. For me, the far more interesting question will be who/how these technologies break out to go beyond gaming/entertainment.”

Pokémon Go played a pivotal role in gathering mass interest in VR and AR. We are still in the early stages of innovation, and the immediate focus is on gaming and entertainment. I am with Steven on this one. We saw the gaming industry transform GPUs, which in turn helped machine learning take off. How will VR and AR benefit from gaming and entertainment?

Quote of the Week

“For algorithms to add value, we need people to actually use them; that is, to pay attention to them in at least some cases. It is often claimed that in order for people to be willing to use an algorithm, they need to be able to really understand how it works. Maybe. But how many of us know how our cars work, or our iPhones, or pace-makers? How many of us would trade performance for understandability in our own lives by, say, giving up our current automobile with its mystifying internal combustion engine for Fred Flintstone’s car?

The flip side is that policymakers need to know when they should override the algorithm. For people to know when to override, they need to understand their comparative advantage over the algorithm — and vice versa. The algorithm can look at millions of cases from the past and tell us what happens, on average. But often it’s only the human who can see the extenuating circumstance in a given case, since it may be based on factors not captured in the data on which the algorithm was trained. As with any new task, people will be bad at this in the beginning. While they should get better over time, there would be great social value in understanding more about how to accelerate this learning curve.”

By Jon Kleinberg, Jens Ludwig, and Sendhil Mullainathan

Image credit: HBR

Silicon Valley, and the tech industry in general, is often criticized for not solving the “big” problems. Last year, Adam Elkus addressed some of these criticisms in his essay “The Manhattan Project Fallacy.” But it turns out that some of the brightest minds are now tackling the “big” social problems with machine learning. In Harvard Business Review, Professor Jon Kleinberg described his project of applying machine learning to bond court cases. He noted that data could be used to improve on judges’ intuition, leading to lower incarceration rates.

The article does a great job of addressing the widespread skepticism caused by overstated claims about machine learning solutions. Social problems are more complicated than programmatic tasks: computers are good at finding patterns and recognizing images, but how effective are they at reasoning about policy outcomes? Professor Kleinberg reflects on his experience and provides some helpful advice:

- Look for policy problems that hinge on prediction.

- Make sure you’re comfortable with the outcome you’re predicting.

- Check for bias.

- Verify your algorithm in an experiment on data it hasn’t seen.

- Remember there’s still a lot we don’t know.
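The fourth point, verifying on data the algorithm hasn't seen, can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the data is synthetic, the "model" is a single score cutoff standing in for a real predictor, and every function name is hypothetical. None of it comes from the HBR article.

```python
import random


def make_synthetic_rows(n, seed=1):
    """Generate (risk_score, outcome) pairs; outcomes are noisy on purpose."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        score = rng.random()
        outcome = score + rng.gauss(0, 0.15) > 0.5
        rows.append((score, outcome))
    return rows


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and set aside a held-out test portion."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def accuracy(rows, threshold):
    """Fraction of rows where 'score >= threshold' matches the outcome."""
    correct = sum((score >= threshold) == outcome for score, outcome in rows)
    return correct / len(rows)


def fit_threshold(train_rows):
    """Pick the cutoff that does best on the training rows only."""
    candidates = sorted({score for score, _ in train_rows})
    return max(candidates, key=lambda t: accuracy(train_rows, t))


if __name__ == "__main__":
    rows = make_synthetic_rows(400)
    train_rows, test_rows = train_test_split(rows)
    threshold = fit_threshold(train_rows)
    print("training accuracy:", accuracy(train_rows, threshold))
    print("held-out accuracy:", accuracy(test_rows, threshold))
```

The number that matters is the held-out accuracy: the cutoff was tuned on the training rows, so the training figure will tend to flatter the model.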

I want to focus our attention on the last point. Machine learning is still a relatively new technology (at least in its applications). This, however, should not deter us from using this wonderful technology. We know that we need to somehow combine human judgment and algorithmic decisions. But to move forward, we need to actually use the algorithms. As the authors point out with their parallel to our ignorance of how our cars and iPhones work, we don’t necessarily need to understand how an algorithm works to apply it. But on the flip side, we NEED to know when to override its decisions, since latent bias in the data will drive biased outcomes.

Rundown & Resources

- Train AI agents in Grand Theft Auto V — OpenAI

- Master web development with these 9985 tricks — knowitall.io

- Word2vec explanation with visualization — Piotr Migdał

- 8 data trends on the radar — O’Reilly

- AI Forecasts (Past, Present, and Future) — Miles Brundage

- Baidu’s Little Fish could be China’s Echo — Verge
