The 4 Computing Types for the Internet of Things


- Last Updated: December 2, 2024

Parikshit Joshi


From a practitioner's point of view, I routinely see the need for computing to be more available and distributed. When I started integrating IoT with OT and IT systems, the first problem I faced was the sheer amount of data that devices sent to our servers. I was working on a plant automation project with 400 integrated sensors, each sending 3 data points every second.
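For a sense of scale, the arithmetic behind those numbers is sketched below. The 8-byte payload per data point is an assumption for illustration, not a figure from the project, and protocol overhead is ignored.

```python
# Back-of-envelope data volume for the plant described above.
SENSORS = 400
POINTS_PER_SENSOR_PER_SECOND = 3
BYTES_PER_POINT = 8  # assumption: one 64-bit value per data point, no metadata
SECONDS_PER_DAY = 60 * 60 * 24

def points_per_second(sensors: int, rate: int) -> int:
    """Total data points arriving at the servers each second."""
    return sensors * rate

pps = points_per_second(SENSORS, POINTS_PER_SENSOR_PER_SECOND)
daily_points = pps * SECONDS_PER_DAY
daily_bytes = daily_points * BYTES_PER_POINT  # raw payload only

print(pps)           # 1200
print(daily_points)  # 103680000
print(daily_bytes)   # 829440000
```

That is over 100 million data points, roughly 0.83 GB of raw values, per day before timestamps, sensor IDs, or any transport overhead are added.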

The Headache with Data

You've probably heard this before, but most sensor data becomes useless within 5 seconds of being generated. Now do you see my point?

We had 400 sensors, multiple gateways, multiple processes, and multiple systems that needed to process this data almost instantaneously.
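One practical consequence is that stale readings can be dropped before they are forwarded anywhere. Below is a minimal sketch, assuming each reading carries a timestamp in seconds from the same clock as the processing system, and using the 5-second window above as the cutoff. The `Reading` type and `fresh_readings` helper are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, List

MAX_AGE_SECONDS = 5.0  # readings older than this are treated as no longer useful

@dataclass
class Reading:
    sensor_id: int
    value: float
    timestamp: float  # seconds, same clock as `now`

def fresh_readings(readings: Iterable[Reading], now: float,
                   max_age: float = MAX_AGE_SECONDS) -> List[Reading]:
    """Keep only readings generated within the last `max_age` seconds."""
    return [r for r in readings if now - r.timestamp <= max_age]

batch = [
    Reading(1, 21.5, timestamp=100.0),
    Reading(2, 22.1, timestamp=101.0),
    Reading(3, 20.9, timestamp=96.0),  # 6 s old at now=102.0, so it is dropped
]
kept = fresh_readings(batch, now=102.0)
print([r.sensor_id for r in kept])  # [1, 2]
```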

At that time, most data processing advocates favored the cloud model, where you always send everything to the cloud. That's also the first type of IoT computing.

1. Cloud Computing for IoT

With the cloud computing model for IoT, you push your sensor data to the cloud and process it there. An ingestion module takes in the data and stores it in a data lake (a very large storage repository), you apply parallel processing over it (with Spark, Azure HDInsight, Hive, etc.), and then you consume the resulting fast-paced information to make decisions.
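The ingest, store, then process-in-parallel flow can be sketched in a few lines. This is a minimal in-process stand-in, not how any cloud service is actually called: a plain list plays the data lake, and a `concurrent.futures` thread pool plays the parallel engine that Spark or HDInsight would provide across a cluster. The function names and chunk size are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean
from typing import Dict, List, Tuple

Record = Tuple[int, float]  # (sensor_id, value)

def ingest(raw_messages: List[Record], data_lake: List[Record]) -> None:
    """Ingestion module: append incoming records to storage as-is."""
    data_lake.extend(raw_messages)

def partition(records: List[Record], size: int) -> List[List[Record]]:
    """Split stored records into chunks that can be processed independently."""
    return [records[i:i + size] for i in range(0, len(records), size)]

def group_by_sensor(chunk: List[Record]) -> Dict[int, List[float]]:
    """Map step: group the values in one chunk by sensor ID."""
    grouped: Dict[int, List[float]] = {}
    for sensor_id, value in chunk:
        grouped.setdefault(sensor_id, []).append(value)
    return grouped

def merge(partials: List[Dict[int, List[float]]]) -> Dict[int, float]:
    """Reduce step: combine per-chunk groups and compute a per-sensor mean."""
    combined: Dict[int, List[float]] = {}
    for part in partials:
        for sensor_id, values in part.items():
            combined.setdefault(sensor_id, []).extend(values)
    return {sensor_id: mean(values) for sensor_id, values in combined.items()}

lake: List[Record] = []
ingest([(1, 10.0), (2, 20.0), (1, 12.0), (2, 22.0), (1, 14.0)], lake)

# A thread pool stands in for the cluster; ProcessPoolExecutor suits CPU-bound work.
with ThreadPoolExecutor(max_workers=3) as pool:
    partials = list(pool.map(group_by_sensor, partition(lake, size=2)))

result = merge(partials)
print(result)  # {1: 12.0, 2: 21.0}
```

The map/reduce split is the point here: each chunk is processed without knowledge of the others, which is what lets a real engine spread the work over many machines.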

Since I started building IoT solutions, many new products and services have appeared that make this much easier:

- If you are an AWS fanboy, you could use AWS Kinesis and AWS Lambda for big data processing.

- You could use Azure's ecosystem, which also makes building big data capabilities extremely easy.

- Or you could use Google Cloud products with tools like Cloud IoT Core (which Google retired in August 2023).

Some challenges that I faced with cloud computing in IoT are:

- Proprietary platforms, and enterprises uncomfortable with keeping their data on Google, Microsoft, or Amazon infrastructure

- Latency and network disruption issues

- Increased storage costs, plus data security and persistence concerns

- Big data frameworks alone often aren't enough to build an ingestion module large enough to meet your data needs

But you have to process your data somewhere, right?

Now comes fog computing!

2. Fog Computing for IoT

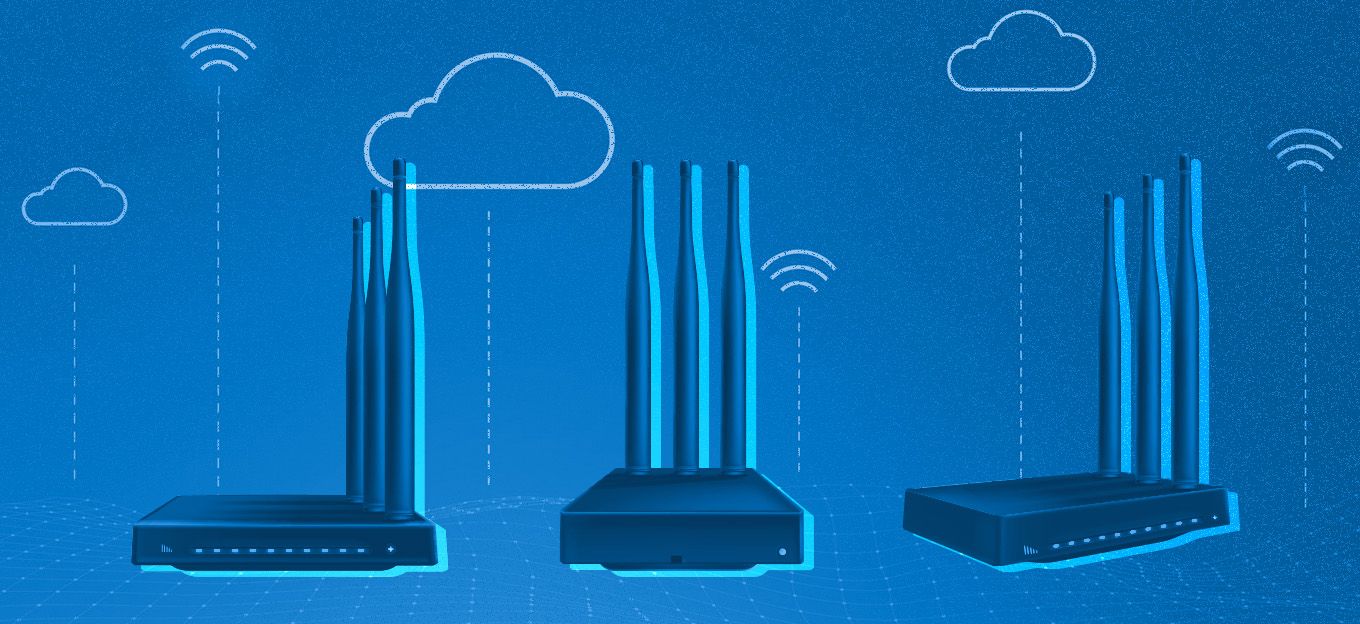

With fog computing, we became a bit more powerful. Rather than sending your data all the way to the cloud and waiting for the server to process it and respond, we now use a local processing unit or computer.
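In practice, a fog node sits between the sensors and the cloud, processes raw readings locally, and forwards only what upstream systems need. Below is a minimal sketch, assuming a fixed aggregation window and a simple alert threshold. `FogNode`, `WINDOW_SIZE`, and `ALERT_THRESHOLD` are hypothetical names, and the `forward` callback stands in for whatever uplink a real deployment uses.

```python
from statistics import mean
from typing import Callable, Dict, List

WINDOW_SIZE = 5          # assumption: readings aggregated per sensor before forwarding
ALERT_THRESHOLD = 80.0   # assumption: values above this are forwarded immediately

class FogNode:
    """Local processing unit that buffers raw readings and sends summaries upstream."""

    def __init__(self, forward: Callable[[Dict], None]) -> None:
        self.forward = forward  # uplink to the cloud (stubbed here)
        self.buffers: Dict[int, List[float]] = {}

    def on_reading(self, sensor_id: int, value: float) -> None:
        # Urgent values bypass aggregation so the response is not delayed.
        if value > ALERT_THRESHOLD:
            self.forward({"type": "alert", "sensor": sensor_id, "value": value})
        buf = self.buffers.setdefault(sensor_id, [])
        buf.append(value)
        # Once a window is full, send one summary instead of WINDOW_SIZE raw values.
        if len(buf) == WINDOW_SIZE:
            self.forward({"type": "summary", "sensor": sensor_id,
                          "mean": mean(buf), "max": max(buf)})
            buf.clear()

sent: List[Dict] = []
node = FogNode(forward=sent.append)
for v in [70.0, 72.0, 85.0, 71.0, 72.0]:
    node.on_reading(sensor_id=7, value=v)
print(sent)
```

Here five raw readings turn into two upstream messages: one immediate alert for the 85.0 value and one summary with a mean of 74.0 and a max of 85.0.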

Four to five years ago, when we implemented this, we didn't have wireless solutions like Sigfox and LoRaWAN, and BLE had neither mesh nor long-range capabilities. So we had to use costlier networking solutions to build a secure, persistent connection to the data processing unit. This central unit was the core of our solutions, and very few specialized providers offered such units.

My first implementation of fog computing was on an oil and gas pipeline project. The pipeline generated terabytes of data, so we created a fog network with fog nodes that processed the data locally.


A few things I learned from implementing that fog network:

- It isn't straightforward; there is a lot you need to know and understand. Building software, which is most of what we do in IoT, is more straightforward and open, and when networking becomes a barrier, it slows you down.

- You need a very large team and multiple providers for such implementations. Often you will face vendor lock-in as well.

OpenFog and Its Impact on Fog Computing

A year ago, a colleague introduced me to OpenFog, an open framework for fog computing architecture developed by leading practitioners. It provides:

- Applications

- Test beds

- Technical specifications

- And a reference architecture as well

3. Edge Computing for IoT

IoT is about capturing micro-interactions and responding as fast as you can. Edge computing brings us closest to the data source and allows us to apply machine learning right at the sensor. If you've been caught up in the edge vs. fog computing debate, the key distinction is this: edge computing is about intelligence at the sensor nodes, whereas fog computing is still about local area networks that provide computing power for data-heavy operations.
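To make "intelligence at the sensor node" concrete, here is a minimal sketch of the kind of lightweight logic that fits on the node itself: a running mean and variance (Welford's online algorithm) with a z-score test, so that only anomalous readings need to leave the device. This is a swapped-in statistical technique rather than a trained machine learning model, and the threshold and warm-up values are assumptions.

```python
import math

class EdgeAnomalyDetector:
    """Constant-memory anomaly check suitable for a small sensor node."""

    def __init__(self, z_threshold: float = 3.0, warmup: int = 10) -> None:
        self.z_threshold = z_threshold  # assumption: flag values > 3 std devs away
        self.warmup = warmup            # assumption: readings needed before flagging
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations (Welford)

    def update(self, x: float) -> bool:
        """Return True if x looks anomalous, then fold it into the statistics."""
        anomalous = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.z_threshold:
                anomalous = True
        # Welford's online update of mean and variance.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return anomalous

detector = EdgeAnomalyDetector()
readings = [20.0, 20.5, 19.8, 20.2, 20.1, 19.9, 20.3, 20.0, 19.7, 20.4, 35.0]
flags = [detector.update(r) for r in readings]
print(flags[-1])  # True
```

The node stores three numbers per signal regardless of how long it runs, which is what makes this style of check plausible on constrained hardware.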

Industry giants like Microsoft and Amazon have released Azure IoT Edge and AWS Greengrass to bring machine intelligence to IoT gateways and sensor nodes with decent computing power. While these are great solutions that make your work much easier, they significantly alter the meaning of edge computing as we practitioners know and use it.

Edge computing shouldn't require machine learning algorithms to run on gateways to build intelligence. In 2015, I came across Knowm and saw their impressive work on neuromemristive processors. Alex of Knowm spoke about how embedded AI works on neuromemristive processors at the ECI conference:

https://www.youtube.com/embed/LHDT6fo9n3U

Real edge computing will happen on neuromemristive devices like these, which can be preloaded with a machine learning algorithm to serve a single purpose and responsibility. Wouldn't that be great? Say your warehouse's end node could perform NLP locally on a handful of key strings that make up a password, like "Open Sesame"!

Such edge devices usually have a neural-network-like construct inside them, so loading a machine learning algorithm essentially burns a neural network into the device. That burn is permanent; you cannot reverse it.
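The write-once, single-purpose behavior described above can be modeled in software to reason about it. The sketch below is only an analogy and not how neuromemristive hardware is actually programmed: a tiny perceptron whose weights can be "burned" exactly once, after which its purpose is fixed. `BurnOnceModel`, `AlreadyBurnedError`, and the example weights are hypothetical.

```python
from typing import Optional, Sequence, Tuple

class AlreadyBurnedError(RuntimeError):
    """Raised when code tries to reprogram a model that has been burned."""

class BurnOnceModel:
    """Single-purpose classifier whose weights can be loaded only once."""

    def __init__(self) -> None:
        self._weights: Optional[Tuple[float, ...]] = None
        self._bias = 0.0

    def burn(self, weights: Sequence[float], bias: float) -> None:
        # Mirrors the irreversible burn: a second load is refused.
        if self._weights is not None:
            raise AlreadyBurnedError("model weights are permanent")
        self._weights = tuple(weights)  # tuple, so it cannot be modified in place
        self._bias = bias

    def infer(self, features: Sequence[float]) -> bool:
        """Perceptron decision: True when the weighted sum exceeds zero."""
        if self._weights is None:
            raise RuntimeError("model has not been burned yet")
        if len(features) != len(self._weights):
            raise ValueError("feature count does not match burned weights")
        score = sum(w * x for w, x in zip(self._weights, features)) + self._bias
        return score > 0

node = BurnOnceModel()
node.burn(weights=[1.0, 1.0], bias=-1.5)  # behaves like a logical AND
print(node.infer([1.0, 1.0]))  # True
print(node.infer([1.0, 0.0]))  # False
try:
    node.burn(weights=[0.0, 0.0], bias=0.0)
except AlreadyBurnedError as err:
    print(err)  # model weights are permanent
```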

There's a whole new space of embedded devices that enable embedded edge intelligence on low-powered sensor nodes.

Let's now look at the fourth computing type for IoT: MIST computing.

4. MIST Computing for IoT

So far, we've seen three ways to facilitate data processing and intelligence for IoT:

- Cloud based compute models

- Fog based compute models

- Edge computing models

Here's a computing type that complements fog and edge computing and makes them even better without requiring us to wait another decade. We could simply use the networking capabilities of the IoT devices themselves to distribute the workload, and leverage dynamic intelligence models that neither fog nor edge computing provides.
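One way to picture distributing the workload across mesh-connected devices is to split a job into small tasks, assign them in proportion to each neighbor's spare capacity, and merge the partial results. Below is a minimal sketch under stated assumptions: the node names, capacities, and the greedy least-loaded scheduling rule are all hypothetical, and the mesh transport itself is not modeled.

```python
import heapq
from typing import Dict, List, Tuple

def assign_tasks(tasks: List[int], capacity: Dict[str, float]) -> Dict[str, List[int]]:
    """Greedily give each task to the node with the lowest load relative to capacity.

    `tasks` holds task costs (e.g. number of readings to process);
    `capacity` is each mesh node's relative processing budget.
    """
    heap: List[Tuple[float, str]] = [(0.0, node) for node in sorted(capacity)]
    heapq.heapify(heap)
    plan: Dict[str, List[int]] = {node: [] for node in capacity}
    # Place the largest tasks first so the final loads stay balanced.
    for cost in sorted(tasks, reverse=True):
        load, node = heapq.heappop(heap)
        plan[node].append(cost)
        heapq.heappush(heap, (load + cost / capacity[node], node))
    return plan

def partial_sum(chunk: List[int]) -> int:
    """Work each node would run locally on its share (here: a simple sum)."""
    return sum(chunk)

plan = assign_tasks([8, 4, 4, 2, 2], {"node-a": 2.0, "node-b": 1.0})
print(plan)   # {'node-a': [8, 4, 2], 'node-b': [4, 2]}
total = sum(partial_sum(chunk) for chunk in plan.values())
print(total)  # 20
```

The node with twice the capacity ends up with more than twice the work units here because the greedy rule balances estimated finishing time, not task count; a real mist scheduler would also need to account for link quality and node churn, which is where the governance problem comes in.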

Adopting this new paradigm could enable high-speed data processing and intelligence extraction on devices with only 256 KB of memory and a data transfer rate of about 100 kb/s.

I won't claim that this model is mature enough yet to serve as an IoT compute model, but mesh networks are clearly an enabler for it.

Personally, I have spent some time implementing MIST-based PoCs in our labs, and the challenge we are trying to solve is the distributed computing model and its governance. But I am 100% sure that within 6 months someone will come up with a better MIST-based model that we can all easily use and consume.

IoT is fascinating and challenging at the same time, and what I write is mostly from parts of my own experience. If you have something to share, add, or critique, I am all ears!
