6 Ways AI Could Lead to Global Catastrophe

- Last Updated: December 2, 2024

Guest Writer

Culturally, humanity is fascinated by the prospect of machines developing to the point of our destruction. Whether the threat comes from The Terminator, The Matrix, or even older films such as WarGames, this type of story enthralls us. It’s not simply technology that fascinates us; what’s compelling is the prospect of technology drastically changing our everyday lives.

Our stories, however, are rooted in reality. As artificial intelligence (AI) is refined over the coming years and decades, the threats may no longer be merely stories. There are many ways in which artificial intelligence might come to threaten other intelligent life. Here are six realistic ways that AI could lead to global catastrophe.

1. Packaging Weaponized AI into Viruses

The world has seen several high-profile cyberattacks in recent years. Simpler tactics such as denial-of-service attacks have escalated into high-profile breaches (such as Experian) and even ransomware attacks. In parallel, the number of interconnected devices and platforms has exploded with the advent of the Internet of Things and the increased accessibility of consumer mobile products.

This potentially catastrophic use of AI is a natural extension of tactics that cyber attackers already employ today. Malware has proven effective at causing disruption in incidents such as Stuxnet, the worm discovered in 2010 that sabotaged centrifuges at an Iranian uranium enrichment facility. While the damage fell on a single country, it proved to be a model for attacking critical infrastructure with dangerous consequences. Soon, viruses may be modified with artificial intelligence to make them even more effective, with consequences we may not be able to imagine. Would viruses be able to transform on their own? Is it possible to leverage AI models to adapt code rapidly to new languages and environments? There are endless possibilities for AI to enhance attacks that are already effective. As we depend more on interconnected systems and automation to control the systems underlying normal life, the threat of these ‘smart viruses’ becomes greater.

2. Failure of Nuclear Deterrents

Because of the speed and consequences of nuclear weapons, countries building nuclear arsenals have also invested in automating sophisticated detection and weapons systems. Ironically, the automation and programmed logic we rely on has contributed to several of the nuclear ‘close calls’ the world has seen, including false alarms from early-warning systems.

In a future where governments and strategists may rely on artificial intelligence to make even more decisions, special attention will be needed to keep such systems from reaching coldly logical conclusions, such as plans to ‘win’ that rely on pre-emptive strikes. Without caution, nuclear detection and deterrence systems could provoke war rather than prevent it.

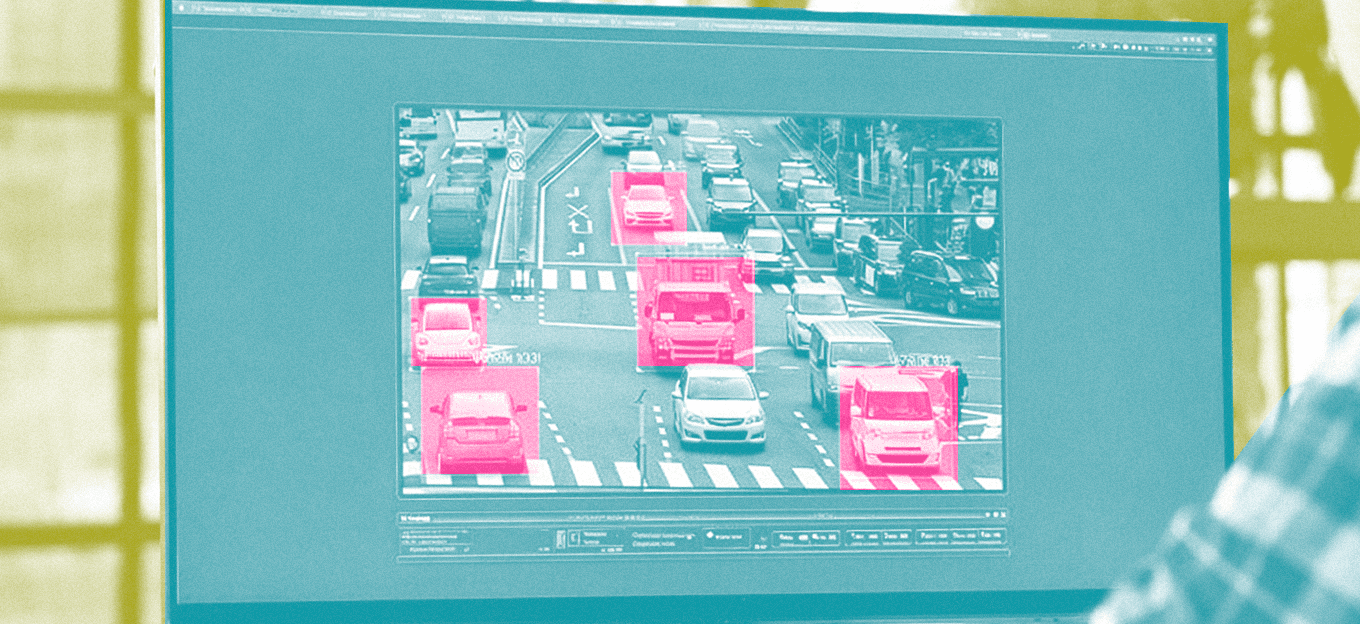

3. AI as an Influencer for Destabilization

Staying with near-term, likely paths, many non-virus uses of artificial intelligence could soon affect the course of humanity. In some cases, this could manifest as political subterfuge that undermines democratic processes and destabilizes nations. Simple versions of this approach have been noted in Western countries' election cycles over the past several years.

Layering artificial intelligence onto botnets and other simple media attacks will make them more effective. Societies must consider how the coordinated, artificially intelligent spread of misinformation can be slowed and stopped. Further, big tech, governments, and populations must find ways to identify and eliminate these more subtle uses of AI to sow discord and undermine stable progress around the world.

4. Human Experience Disappears After We All Upload

Moving from clear, near-term threats to the first scenario that assumes significantly more advanced AI, we turn to the possibility of human immortality enabled by uploading minds to advanced AI environments.

Many researchers are already looking for ways to emulate and advance nature’s designs in computing. A subset of them is even searching for ways to immortalize themselves in advanced computers. What if those innovators get far enough to transfer their collected experiences and models of thinking into computers?

One potential threat here is that artificial intelligence allows the human race to immortalize itself via ‘upload,’ but AI itself doesn’t develop far enough to support the full range of human experience and continued growth. By trying to gain immortality, we might give up the human experience as we know it.

5. “Narrow” Superintelligence Gains Strategic Control

Many of these scenarios sound like the plots of movies. This one focuses on what a specialized AI actor, short of full superhuman intelligence, could accomplish when pursuing strategic advantages without human control.

With the ascendance of ‘superintelligence’ on the horizon, humanity must remain mindful of how an intelligence many orders of magnitude beyond our own may be able to assert power over the real world.

Yet experts observe that an AI need not become superintelligent to exert a dominance that subordinates nations or the entire world. In this scenario, the strategic advantage isn’t necessarily digital. A specialized AI could produce dramatic, overwhelming strategic advantages in domains such as nanotech or biotech that upset the balance of the world order.

6. Our Ability to Create “Branches” for More Advanced Logic Outpaces Our Ability to Ensure Quality

The very first website ever created is only a little over thirty years old. In the time since it was first published, the internet has changed humanity. Every new line of code, online or off, is a chance for problems. For many well-known software companies, quality assurance, or testing, is a foundational step in creating or refining anything they put into the world.

But as code gets more complicated, and as we ask software to do more for us, more opportunities for faults arise. One final way we may see AI lead to catastrophe lies in how we build and use it. While society has benefited immeasurably from advances in automation, resource management, and the engagement that technology enables, those benefits come with a cost: the due diligence needed to ensure the integrity of the software we build.

Without proper planning, artificial intelligence built for convenience or improvement in specific domains could prove faulty. As the code needed to execute the tasks set for AI grows, so do the opportunities for bugs. Without proper planning and quality assurance, the failure could be our own: a failure to introduce rigor into the process of releasing new functionality ‘into the wild.’

The story of humanity is one of resilience and resourcefulness, and artificial intelligence is a product of our collective ingenuity. Our ever-increasing reliance on technology also requires us to be vigilant against its misuse. With preparation and luck, these potential catastrophes may not come to pass. The threats, however, are real and numerous.
