Alexa (Amazon’s OS) Dominates at CES 2017, Neural Architecture Search with Reinforcement Learning & 30 Best Pieces of Advice for Entrepreneurs

- Last Updated: December 2, 2024

Yitaek Hwang

Alexa, let’s take over CES 2017

Amazon sold millions of Alexa-enabled devices this past holiday season (9x more than the year before), and many pundits declared that Alexa “stole the show” at CES 2017. Alexa was indeed everywhere at CES: in LG’s refrigerator, Ford’s F-150s, Hubble’s camera, Samsung’s vacuum cleaners, and Coway’s air purifiers, to name a few. Alexa faces stiff competition, with Google having jumped into the virtual assistant war last year. So why is Alexa so successful? Ben Thompson of Stratechery explains by examining how Microsoft, Google, and Facebook each succeeded by owning an operating system:

Summary:

- Operating systems (OS) manage the computer’s resources to allow software applications to run. According to Thompson, this role makes the OS attractive to tech companies since it: 1) reduces the plane of competition for hardware providers to performance, 2) creates network effects (more users lead to more applications for the OS), and 3) allows companies to own user relationships.

- Microsoft Windows: Windows computers capture this idea perfectly. Massive competition among hardware providers led to cheaper and better components. Demand from IBM helped establish Windows as the default OS for enterprise PCs. Finally, the Windows ecosystem gave birth to the Office franchise and the Windows Server line of products.

- Mobile OS: Microsoft lost the mobile market to Google’s Android and Apple’s iOS. Unlike Windows, though, Android could not replicate Microsoft’s dominance of the PC market simply by virtue of being free. Apple, being a hardware company that used its OS as a differentiator, was inherently blind to the risk of building hardware. The result was a duopoly of iOS and Android in the mobile OS market.

- Internet: Since Google owns the search market, Google in effect owned the OS of the Internet (even more significant than owning the browser market with Chrome). Websites became the hardware provider equivalent, forced to comply with Google’s PageRank algorithms to be more visible. With more websites and user data, Google introduced a new business model for OS: advertising.

- Facebook: If Google made its fortune on top of Windows, the duopoly of iOS and Android led to Facebook being the chokepoint on the app market. Facebook dictates the social interactions of nearly two billion people through its platform (Instagram and Messenger included).

- Alexa/Virtual Assistant: The failure of the Fire Phone illuminated Amazon’s “deeply rooted culture of modularity and services.” Alexa created a new market, a voice-based personal assistant in the home, by leveraging Amazon’s massive cloud infrastructure. At home, smartphones are often plugged in to recharge rather than in hand, which gave Alexa an opening to build a smart home ecosystem based on voice.

Takeaway:

The tech giants have owned the consumer space by building an OS and dominating its market. Amazon is building an OS for the home. Hardware companies (as demonstrated at CES 2017) are competing to integrate Alexa into their products. More Alexa-enabled devices mean more Prime customers in the future. Amazon doesn’t need to make money on Alexa initially: it’s a gateway for Amazon to become THE logistics provider of the future.

You can read the original post by Ben Thompson here.

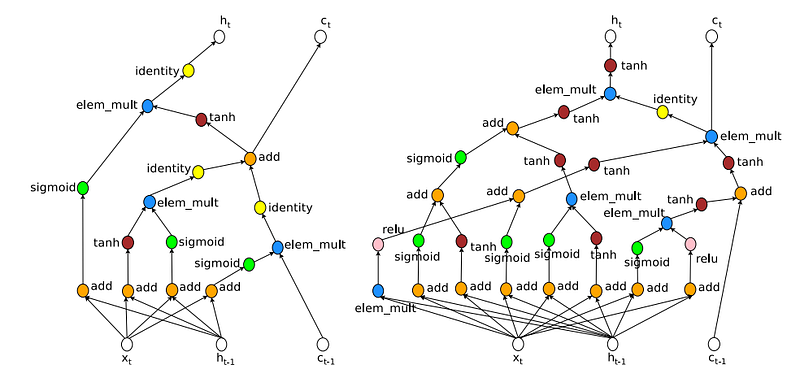

Computer Generated Neural Architectures

Google had quite the year in machine learning (see 2016 in Review: IoT, Machine Learning, AI & Automation) with AlphaGo and a much-improved Google Translate. The Google Brain team is already off to a great start this year with its new paper, “Neural Architecture Search with Reinforcement Learning,” submitted to ICLR 2017. Despite the success of deep learning, crafting a successful neural network is still a difficult task. Barret Zoph and Quoc Le use a recurrent neural network, trained with reinforcement learning, to generate neural network architectures automatically. Their results on the CIFAR-10 and Penn Treebank datasets demonstrate the potential of a general method for designing neural networks.

Summary:

- The recent success of deep neural networks in natural language processing and computer vision has brought a paradigm shift from hand-tuning features to carefully designing neural architectures. Today, designing architectures requires expert knowledge and lengthy trial-and-error experimentation.

- The key observation made by Zoph and Le is that “structure and connectivity of a neural network can be typically specified by a variable-length string.” An RNN can therefore generate these strings. The accuracy of the resulting network on a validation set serves as the reward signal, and reinforcement learning uses that reward to improve the RNN.

- On image recognition with the CIFAR-10 dataset, Neural Architecture Search generated a model that achieved a test set error rate of 3.84% while being 1.2x faster than the current best model. On language modeling with the Penn Treebank, Google Brain’s model achieved a test set perplexity of 62.4, which is 3.6 perplexity points better than the previous state-of-the-art systems.
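To make the “variable-length string” observation concrete, here is a minimal sketch of how a flat sequence of tokens could be decoded into a list of layer descriptions. The three-field layout (filter height, filter width, number of filters) and the names `ConvLayer` and `decode_architecture` are assumptions made for illustration; the paper's actual encoding has more fields per layer.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ConvLayer:
    """One convolutional layer described by three integers (illustrative only)."""
    filter_height: int
    filter_width: int
    num_filters: int


# Assumed token layout: three integers per layer.
FIELDS_PER_LAYER = 3


def decode_architecture(tokens: List[int]) -> List[ConvLayer]:
    """Turn a flat token sequence into layer specs, three tokens at a time."""
    if len(tokens) % FIELDS_PER_LAYER != 0:
        raise ValueError("token count must be a multiple of 3")
    layers = []
    for start in range(0, len(tokens), FIELDS_PER_LAYER):
        height, width, filters = tokens[start:start + FIELDS_PER_LAYER]
        layers.append(ConvLayer(height, width, filters))
    return layers


# A longer sequence simply describes a deeper network.
two_layer = decode_architecture([3, 3, 24, 5, 5, 36])
print(two_layer)
```

Because depth is just sequence length, any model that can emit sequences, such as an RNN, can propose networks of different sizes.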

Takeaway:

The method proposed in the paper uses a recurrent network controller to generate neural architectures. The controller is then trained with a policy gradient method to maximize the expected accuracy of the architectures it generates. As far as I know, this is a novel method for searching for optimal neural network architectures. The training time required to find a good network is quite long, and the generality of the method is still in question, despite the confidence of the paper’s authors. If more experiments verify its potential, this has the makings of a result as significant as AlexNet was for CNNs.
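The sample, score, and update loop behind a policy gradient search can be sketched in a few lines. This is a toy under loud assumptions, not the paper's implementation: the controller here is a set of independent softmax distributions rather than an RNN, `toy_reward` is a made-up stand-in for validation accuracy (no network is trained), and `SEARCH_SPACE`, `search`, and the hyperparameters are hypothetical.

```python
import math
import random

# Hypothetical search space: each decision picks one option.
SEARCH_SPACE = {
    "filter_size": [1, 3, 5, 7],
    "num_filters": [24, 36, 48, 64],
}


def softmax(logits):
    """Convert logits to probabilities in a numerically stable way."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_reward(arch):
    """Made-up stand-in for validation accuracy; favors 3x3 filters and 48 filters."""
    score = 0.5
    score += 0.25 if arch["filter_size"] == 3 else 0.0
    score += 0.25 if arch["num_filters"] == 48 else 0.0
    return score


def search(steps=2000, lr=0.1, seed=0):
    """REINFORCE with a moving-average baseline over independent categorical choices."""
    rng = random.Random(seed)
    logits = {name: [0.0] * len(options) for name, options in SEARCH_SPACE.items()}
    baseline = 0.0
    for _ in range(steps):
        # 1) Sample an architecture from the current policy.
        probs = {name: softmax(values) for name, values in logits.items()}
        picks = {name: rng.choices(range(len(p)), weights=p)[0] for name, p in probs.items()}
        arch = {name: SEARCH_SPACE[name][idx] for name, idx in picks.items()}
        # 2) Score it; subtracting a baseline reduces the variance of the update.
        reward = toy_reward(arch)
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # 3) Policy gradient step: d log p(pick) / d logit_j = 1[j == pick] - p_j.
        for name, idx in picks.items():
            for j, p in enumerate(probs[name]):
                grad = (1.0 if j == idx else 0.0) - p
                logits[name][j] += lr * advantage * grad
    # Report the most probable option for each decision.
    return {
        name: SEARCH_SPACE[name][max(range(len(values)), key=values.__getitem__)]
        for name, values in logits.items()
    }


best_architecture = search()
print(best_architecture)
```

In the real method, step 2 is the expensive part: every sampled architecture must be trained before its validation accuracy is known, which is why the search takes so long.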

You can read the original submission here.

Quote of the Week

This week, instead of focusing on a specific quote, we are listing some of the most impactful advice collected by First Round Review. Number 20 on the list, “Fuse Engineering, Product and Design from the start,” was featured in our very first Last Week in the Future. Below are some of the lessons that resonated with us here at Leverege. You can also read the full list at First Round Review.

9. Hire for CX before you have customers

“Success is measured in happy customers, not product releases.”

This rings especially true in IoT, where the entire industry suffers from fragmentation, interoperability difficulties, and insufficient support. The best companies we’ve worked with all had excellent support. They could empathize with software issues, even though their expertise was in hardware. IoT customers increasingly expect out-of-the-box solutions. And it makes them happy if someone is willing to help them get there.

13. Beat your competition by introducing unfamiliarity — a better mousetrap won’t work

“The bottom-line is you have to build a lens to allow users to see a new world rather than features to help them see an old world better.”

This was a lesson we learned when we participated in a hackathon. To improve prescription adherence, we created a chatbot to remind patients to take their medicine on time. While a voice interface through Alexa and text-based reminders were nice, this was a case of building a better mousetrap. It didn’t fundamentally change the problem at hand.

21. Write down any question you hear from customers more than twice. That’ll feed your content marketing.

“Good content marketing requires finding product-market fit all over again.”

We began our newsletter “IoT For All” (iot-for-all.com) because we saw a disconnect in the IoT world. Devices and services were designed by engineers for engineers. For a technology with a goal of creating a connected world, it was missing the human connection. Our #AskIoT series explains IoT concepts in simple, non-technical terms. We also share guides and other resources for IoT. Our customers frequently asked us to explain LPWAN and cellular IoT in layman’s terms. We listened, and “IoT For All” was born.

28. Invest in data science only after you’ve validated your MVP.

“Data science requires data to science, and most companies don’t have much data on day one.”

Everyone is scrambling to reap the benefits of data science. Big data and machine learning are undoubtedly powerful tools for building better products. But the first step in data science is getting the data. As Jeremy Stanley, Instacart’s VP of Data Science, explains, not everyone has to build a data science team from day one. Get the data first, see if the data will drive action, and then pursue a data science strategy.

Rundown & Resources

- Self-driving MarioKart — TensorKart

- Data Visualization Catalogue — DVC

- What I Learned Implementing a Classifier from Scratch — Jean-Nicholas Hould

- Apple’s First AI Paper — arXiv

- RNN Tutorial for Artists — otoro
