DeepMind’s One-Shot Learning, Object Recognition with Radar, and Election Fallout on Tech


- Last Updated: December 2, 2024

Yitaek Hwang


DeepMind Does It Again

Artificial intelligence has yet to match the human brain in how quickly it learns: even the best algorithms need huge datasets to train carefully tuned models over long periods of time. Earlier this week, a team at Google's DeepMind led by Oriol Vinyals introduced a new technique for one-shot learning, which is the ability to recognize objects in images from a single example. DeepMind's neural network architecture adds a memory component, which allows deep learning models to learn from much smaller datasets.

Summary:

- Will Knight from MIT Technology Review explains that probabilistic programming techniques capable of one-shot learning already exist, but they are not compatible with deep learning architectures.

- DeepMind's new approach combines the high accuracy of deep learning with fast training on smaller datasets. The system still needs several hundred labeled datasets (compared to thousands), but its ability to recognize new objects from a single example is astonishing.

- This work is distinct from the differentiable neural computer that another DeepMind group published last month (see LWITF v5.0). The fact that multiple approaches are embedding memory into deep learning signals a new trend in AI systems.
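The idea of classifying a new image by comparing it against a small labeled "memory" can be sketched in a few lines. This is a minimal illustration, not DeepMind's implementation. It assumes each image has already been turned into an embedding vector (the 3-D vectors below are hypothetical, whereas the real system learns its embeddings end to end). It also assumes a single stored example per class. The query is compared to every stored example by cosine similarity, a softmax turns those similarities into attention weights, and the weights are summed per label.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def one_shot_predict(query, support):
    """Classify `query` against a support set of (embedding, label) pairs.

    Returns (best_label, probabilities), where the probabilities come from a
    softmax over cosine similarities (attention over the stored examples).
    """
    sims = [cosine(query, emb) for emb, _ in support]
    # Subtract the max before exponentiating for numerical stability.
    m = max(sims)
    exps = [math.exp(s - m) for s in sims]
    total = sum(exps)
    probs = {}
    for (_, label), e in zip(support, exps):
        probs[label] = probs.get(label, 0.0) + e / total
    best = max(probs, key=probs.get)
    return best, probs

# Hypothetical embeddings: exactly one labeled example per class ("one shot").
support = [
    ([1.0, 0.1, 0.0], "cat"),
    ([0.0, 1.0, 0.2], "dog"),
    ([0.1, 0.0, 1.0], "car"),
]
label, probs = one_shot_predict([0.9, 0.2, 0.1], support)
print(label)  # cat
```

Adding a new class only requires appending one more (embedding, label) pair to `support`, with no retraining. That property is what makes this memory-style design attractive for one-shot learning.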

Takeaway: Deep learning, and neural networks more generally, still cannot fully emulate human learning. However, DeepMind's new one-shot learning system, which adds memory mechanisms to deep learning, pushes the fields of computer vision and artificial intelligence a bit closer to human capabilities. Also, the ability to achieve high accuracy with smaller datasets gives companies that lack access to big data, or the infrastructure to handle it, an opportunity to reap the benefits of deep learning.

+DeepMind and Blizzard team up to use StarCraft II as an AI research environment

Object Recognition with Radars

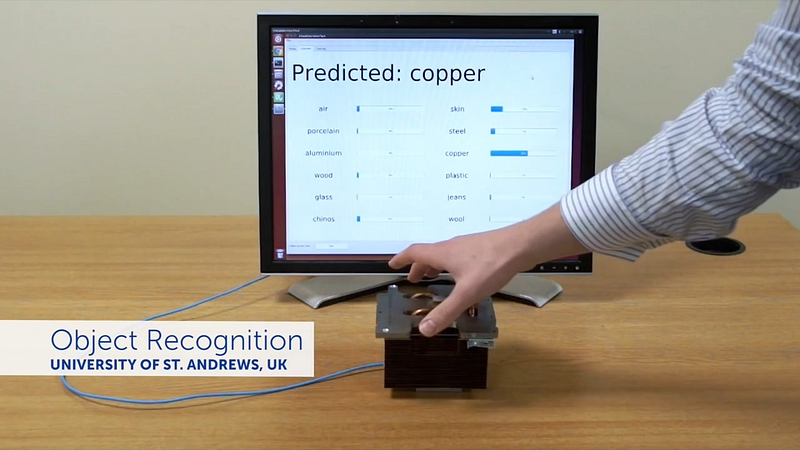

Convolutional neural networks have dominated image recognition in recent years. While the computer vision community focused on deep learning, Google introduced Project Soli last year. Soli uses a miniature radar sensor to interpret small movements such as hand gestures. Now a team from the University of St. Andrews is applying machine learning to Soli radar signals to recognize and classify materials and objects. The system is called RadarCat, and its demo video illustrates potential applications and work in progress for this amazing technology.

Summary:

- RadarCat (Radar Categorization for Input and Interaction) sends radar signals and uses machine learning to recognize objects. It can also identify materials, for example distinguishing plastic cups from porcelain ones.

- The creators of RadarCat see it opening new opportunities in navigation for blind users, in consumer interaction, and in industrial automation such as automatic recycling and laboratory process control.

- Beyond the applications outlined by its creators, RadarCat technology could have a large impact on warehouse automation (e.g. Amazon robots), defense (e.g. drone detection), and quality assurance.
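The recognition step comes down to mapping a vector of radar-derived features to a label. The toy sketch below shows that step under stated assumptions. Each radar reading is assumed to be already reduced to a fixed-length feature vector, and the numbers are synthetic, made-up values rather than real Soli measurements. A nearest-centroid classifier is used here only for brevity, and it is not necessarily what RadarCat itself uses.

```python
import math
from collections import defaultdict

def train_centroids(samples):
    """Average the feature vectors of each label (nearest-centroid training)."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {label: [v / counts[label] for v in vec] for label, vec in sums.items()}

def classify(features, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))

# Synthetic, hypothetical "radar feature" vectors; NOT real Soli data.
training = [
    ([0.82, 0.10, 0.31], "plastic cup"),
    ([0.78, 0.12, 0.29], "plastic cup"),
    ([0.35, 0.64, 0.70], "porcelain cup"),
    ([0.38, 0.60, 0.74], "porcelain cup"),
]
centroids = train_centroids(training)
print(classify([0.80, 0.11, 0.33], centroids))  # plastic cup
```

In practice, a real system would train a stronger classifier on many labeled readings per object or material. The overall pipeline stays the same, though: extract features from the radar signal, then predict a label.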

Takeaway: Radars and cameras each have their own advantages and disadvantages. Traditionally, radar has been superior at measuring range and relative speed, whereas cameras excel at object recognition and measuring lateral movement. Now, RadarCat's ability to recognize objects could make smart devices more robust, because radar could work in tandem with cameras to sense and identify objects. For example, self-driving cars could use it at night to complement their cameras. This is an early technology, but an exciting one with lots of potential.

Quote of the Week

“First and foremost, the vote [on Tuesday] registered a strong concern about the plight of those who feel left out and left behind.

In important respects, this concern is understandable. In recent months we've been struck by a study from Georgetown University. It shows that a quarter-century of U.S. economic growth under Democrats and Republicans alike has added 35 million net new jobs. But the number of jobs held by Americans with only a high school diploma or less has fallen by 7.3 million. The disparity is striking. The country has experienced a doubling of jobs for Americans with a four-year college degree, while the number of jobs for those with a high school diploma or less has fallen by 13 percent.”

- Brad Smith, President and Chief Legal Officer at Microsoft

It is impossible to overlook the election this past week. Aside from the politics and the ongoing conversations about the ramifications of this election, there are two major headlines from a tech standpoint: 1) the failure of data analytics and flawed polling methods, and 2) the growing division that the tech industry is causing. As I write this newsletter today in Knoxville, Tennessee, I want to draw attention to the role technology plays in the lives of roughly half of Americans, and to what the tech industry must do to address their anger.

Self-driving cars are coming, or to a degree are already here. Artificial intelligence and machine learning are pushing the boundaries of automation. Most importantly, while the explosive growth of IoT has created a growing shortage of developers, the opportunities it creates will not be available to everyone, especially those who spoke out in this election. We have to talk about its impact and start formulating solutions.

Microsoft has vowed to “innovate to promote inclusive economic growth that helps everyone move forward.” But for all of us interacting with technology in some form or another, how can we work towards this goal? Leave us your thoughts below in the comment section!

The Rundown

- TensorFlow & Deep Learning without a PhD — YouTube

- Free e-books for developers — DevFreeBooks

- Random forests in Python — YhatBlog

- Artificial Pancreas — Scientific American

Resources

- Deck.GL: Uber's WebGL-powered framework for visualizing datasets at scale

- Mest.io: Talk to a stranger who has different opinions than you

- Hyper.sh: Effortless Docker hosting

- Explosion.ai: Custom AI and NLP solutions
