How to Use Edge Orchestrators to Deploy Artificial Intelligence at Scale

- Last Updated: December 2, 2024

Barbara

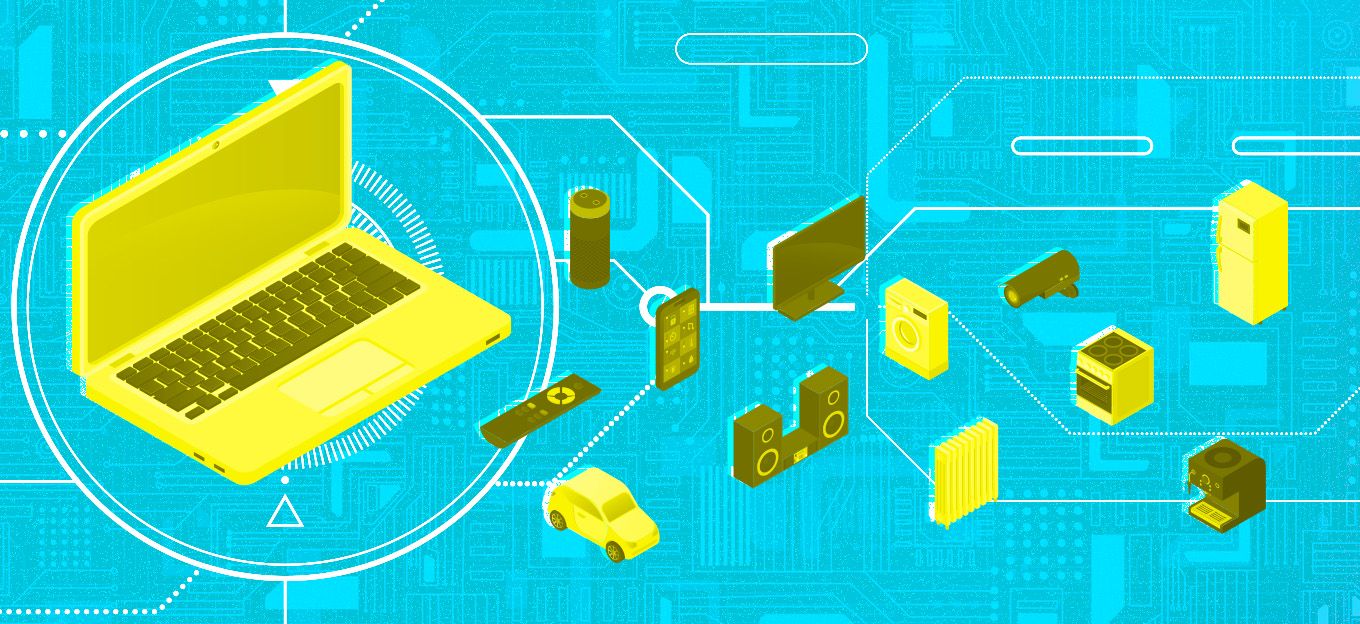

Many industries demand ever greater speed and autonomy in decision-making to adapt to increasingly volatile markets. At the same time, industrial equipment generates a growing volume of data, at higher frequency, from devices that are more diverse and more geographically dispersed. This need to capture more data more often and make decisions on the fly has increased the computing capacity required near the place where the data is generated, i.e., the Edge, and has driven the development of distributed Artificial Intelligence, also called Intelligence at the Edge or Edge AI.

Edge AI's main advantages are lower latency, more efficient use of communication bandwidth, lower Cloud service costs, and improved security, which is especially important in critical industries.

For all these reasons, the industrial sector is adopting Edge Computing. Analysts such as Gartner, IDC, and Grand View Research are forecasting annual growth of more than 30%. Traditional cloud computing service providers are beginning to position themselves to offer infrastructure that enables this Edge Computing model.

Edge Orchestrators and Intelligence Management

Artificial Intelligence is based on the execution of multiple complex algorithms that allow machines to make decisions without human intervention, simply from the data they capture and process. Many of these algorithms can be run close to the source of that data, i.e., at the Edge, as Machine Learning and Artificial Intelligence, in general, are well suited to a distributed intelligence operating model.

However, running AI on end devices can be challenging, as they often have limited processing capabilities. The need therefore arises either to use more powerful devices or to manage their hardware resources efficiently through lightweight software that can execute these algorithms without overloading the system. This is especially important because several algorithms will often run in parallel, each possibly from a different origin and author and based on a different technology.

In addition, these algorithms often depend on many data analytics and machine learning libraries, which must be available on the system in matching versions to avoid compatibility issues. The algorithms also represent the core business of many companies, so it is vital to protect that intellectual property, typically by obfuscating or encrypting them both in transit and while they run on the Edge Nodes. Finally, the algorithms evolve over time, so their versions must be managed centrally.
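As a rough illustration of the central version management described above, the sketch below compares the versions a central catalogue wants against the versions each Edge Node reports, and lists what has to be updated. The node names, algorithm names, version strings, and the `plan_updates` helper are all hypothetical; they do not come from any particular orchestrator.

```python
def plan_updates(desired, reported):
    """Return {node: {algorithm: target_version}} for every node whose
    deployed version differs from, or is missing relative to, the
    centrally defined one."""
    plan = {}
    for node, deployed in reported.items():
        changes = {
            algo: version
            for algo, version in desired.items()
            if deployed.get(algo) != version
        }
        if changes:
            plan[node] = changes
    return plan


# Hypothetical central catalogue: the version every node should run.
desired = {"anomaly-detector": "1.4.0", "demand-forecast": "2.1.0"}

# Hypothetical state reported by three Edge Nodes.
reported = {
    "substation-001": {"anomaly-detector": "1.4.0", "demand-forecast": "2.1.0"},
    "substation-002": {"anomaly-detector": "1.3.2", "demand-forecast": "2.1.0"},
    "substation-003": {"demand-forecast": "2.0.0"},
}

for node, changes in plan_updates(desired, reported).items():
    print(node, "->", changes)
```

Only the nodes that are out of date appear in the plan, so an up-to-date node generates no traffic. A real platform would also have to handle rollbacks and the encrypted transfer of the algorithms, which this sketch leaves out.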

To solve all these problems, some companies are starting to offer so-called Edge Application Orchestrators, or simply Edge Orchestrators.

Most of these platforms rely on an increasingly widespread practice in Edge Computing: virtualization through Docker containers. Containers let the platforms remotely manage the applications and algorithms running on the Edge, using different strategies for efficient resource allocation. These strategies lead to efficient and secure execution of tasks while hiding the underlying complexity from the user.
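The article does not say which allocation strategies these platforms use. As one possible example, the sketch below applies a simple first-fit-decreasing heuristic: containerized workloads are sorted from largest to smallest, and each is placed on the first node with enough free CPU and memory. The `Node` and `Workload` classes, the resource figures, and all names are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: float  # free CPU cores (assumed unit)
    mem_free: int    # free memory in MiB (assumed unit)
    workloads: list = field(default_factory=list)


@dataclass
class Workload:
    name: str
    cpu: float  # CPU cores requested
    mem: int    # memory requested in MiB


def place(workloads, nodes):
    """First-fit-decreasing placement.

    Workloads are handled largest first (by memory, then CPU). Each one
    goes to the first node that still has room. Returns the names of
    the workloads that fit nowhere.
    """
    unplaced = []
    for w in sorted(workloads, key=lambda w: (w.mem, w.cpu), reverse=True):
        for n in nodes:
            if n.cpu_free >= w.cpu and n.mem_free >= w.mem:
                n.cpu_free -= w.cpu
                n.mem_free -= w.mem
                n.workloads.append(w.name)
                break
        else:
            # No node had enough free resources for this workload.
            unplaced.append(w.name)
    return unplaced


nodes = [Node("edge-a", 2.0, 1024), Node("edge-b", 4.0, 4096)]
workloads = [
    Workload("anomaly-detector", 1.0, 512),
    Workload("demand-forecast", 2.0, 2048),
    Workload("vision-model", 4.0, 8192),
]

unplaced = place(workloads, nodes)
for n in nodes:
    print(n.name, n.workloads)
print("unplaced:", unplaced)
```

With these example figures, the largest workload fits on neither node and is reported back instead of overloading a device. That matches the constraint discussed earlier: end devices have limited processing capacity.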

Edge Orchestrator Use Cases

The sectors where Edge Orchestrators, and Edge Computing in general, can have the greatest impact are those that deal with a high volume of connected devices. The impact is greater still when these devices are geographically dispersed and generate data at high frequencies.

One industry where we see this trend materialising is the electricity sector, with transformer substations being one of the clearest examples.

Medium- to low-voltage electricity transformation centers are the infrastructures responsible for adapting electrical energy to be consumed by citizens in their homes. They are part of the distribution network, and there are hundreds of thousands of them in a country the size of Spain.

These transformation centers contain a range of industrial equipment. Digitizing it with Artificial Intelligence and Edge Computing technologies makes it possible to predict and anticipate demand or to detect potential failures before they occur. This information can be invaluable for site operators, manufacturers, and even end users.

Using an Edge Orchestrator in such an application makes perfect sense, as it simplifies the deployment of the algorithms, speeds up the time-to-market of the solution, and offers a smooth and frictionless debugging and maintenance cycle. Edge Orchestrators allow companies to focus on what should be their primary concern: conceiving those algorithms and using them to operate the business.

Another case where an Edge Orchestrator could be used is distributed manufacturing, where the product is made in a network of several geographically dispersed facilities. Coordination between the different centers is usually done through dedicated systems, generally in the Cloud.

However, collaborative algorithms at the Edge, in which the centers agree among themselves instead of being directed from a higher hierarchical level, can optimise investment while also improving data security and facilitating compliance with industry regulations that sometimes do not fit well with Cloud technologies.
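To make the idea of centers "agreeing among themselves" more concrete, here is a minimal sketch of one well-known decentralized technique, averaging consensus. Each site repeatedly replaces its local value with the mean of its own value and its neighbors' values, with no central coordinator. The article does not name a specific algorithm, so this choice, the plant names, the ring topology, and the numbers are all assumptions.

```python
def consensus_round(values, neighbors):
    """One synchronous round: every site averages its own value with
    the values of its direct neighbors."""
    return {
        site: (values[site] + sum(values[n] for n in neighbors[site]))
        / (1 + len(neighbors[site]))
        for site in values
    }


def run_consensus(values, neighbors, tolerance=1e-6, max_rounds=1000):
    """Repeat rounds until all sites agree within `tolerance`.

    Returns the final values and the number of rounds performed.
    """
    for round_no in range(max_rounds):
        if max(values.values()) - min(values.values()) <= tolerance:
            return values, round_no
        values = consensus_round(values, neighbors)
    return values, max_rounds


# Four hypothetical plants connected in a ring; each one talks only to
# its two neighbors.
neighbors = {
    "plant-a": ["plant-b", "plant-d"],
    "plant-b": ["plant-a", "plant-c"],
    "plant-c": ["plant-b", "plant-d"],
    "plant-d": ["plant-c", "plant-a"],
}

# Hypothetical local estimates, e.g. spare capacity in units per day.
local = {"plant-a": 100.0, "plant-b": 80.0, "plant-c": 120.0, "plant-d": 60.0}

agreed, rounds = run_consensus(local, neighbors)
print(rounds, {site: round(v, 3) for site, v in agreed.items()})
```

Every site has the same number of neighbors in this ring, so the values converge to the network-wide average of the initial estimates. On an irregular topology, the same update rule still reaches agreement, but generally on a weighted average rather than the plain mean. Only aggregated values move between sites, which fits the data-security argument made above.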

The Future of Orchestrators and the Industry

Undoubtedly, the industry is moving towards a computing paradigm in which real-time decision-making is distributed across the nodes of the network and delegated to them. Edge Orchestrators enable this decision-making process, facilitating the execution of increasingly complex Machine Learning models in a parallel and distributed manner. These cognitive machines will allow us to overcome some of the barriers we currently face, such as achieving low latency in the read-think-act cycle and integrating data and decisions from multiple Edge Nodes.

Furthermore, Edge Orchestrators will enable models to respond collaboratively through intelligent networks of nodes. These capabilities will open the door to Industry 5.0, a dramatic transformation that will put humans back at the center of industry, creating intelligent spaces where humans will communicate seamlessly with these intelligent networks of nodes.
