How AI Is Quietly Transforming Site Reliability Engineering

- Last Updated: February 18, 2026

Baskar

Artificial intelligence (AI) is quietly reshaping how site reliability engineering (SRE) operates. Instead of engineers reacting after something breaks, AI-powered systems can now flag issues before they turn into outages. As applications become increasingly intertwined, machines handle what humans can’t, such as continuously monitoring very large numbers of metrics at once. When problems arise, intelligent software can respond faster and adapt more readily than any single expert could.

Transitioning from Reactive to Proactive Reliability Engineering

Most SRE routines rely on fixed thresholds and manual tuning. This approach tends to stall or misfire in complex environments. Rather than waiting for a crisis, teams can use AI tools to intervene before problems occur. By analyzing historical logs, traces, and performance metrics, algorithms spot the subtle shifts that signal trouble.

Outliers are no longer flagged by rigid rules built on fixed numbers. Instead, they are judged against baselines learned from historical records. Because of this shift, alert quality improves, and engineers spend less time filtering through noise. This is especially valuable during periods of heavy fluctuation, such as market open hours or daily traffic peaks, when metrics swing sharply even though nothing is wrong. These variations fade into the background rather than triggering red flags. As a result, engineers receive alerts that point more clearly to where the real risk lies.
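As a rough illustration of judging a metric against a learned baseline rather than a fixed number, the sketch below builds a per-hour baseline from past samples and flags a value only when it is unusual for that hour. The function names, the hour-of-day grouping, and the z-score cutoff of 3.0 are assumptions made for this example, not a description of any particular product.

```python
from collections import defaultdict
from statistics import mean, stdev

def build_hourly_baseline(history):
    """history: iterable of (hour_of_day, value). Returns {hour: (mean, stdev)}."""
    by_hour = defaultdict(list)
    for hour, value in history:
        by_hour[hour].append(value)
    # At least two samples per hour are needed to estimate a standard deviation.
    return {h: (mean(v), stdev(v)) for h, v in by_hour.items() if len(v) >= 2}

def is_anomalous(baseline, hour, value, z_threshold=3.0):
    """Flag a value only if it is unusual for that hour of day."""
    if hour not in baseline:
        return False  # no baseline yet: stay quiet rather than guess
    mu, sigma = baseline[hour]
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > z_threshold

# Hypothetical request rates: hour 9 is a daily peak, hour 3 is quiet.
history = [(9, 1000), (9, 1050), (9, 950), (9, 1020),
           (3, 100), (3, 110), (3, 90), (3, 105)]
baseline = build_hourly_baseline(history)
peak_flagged = is_anomalous(baseline, 9, 1030)    # normal for the peak hour
night_flagged = is_anomalous(baseline, 3, 1030)   # same value, unusual at night
```

The same reading of 1030 is ignored at the daily peak and flagged overnight, which is the behavior a single fixed threshold cannot express.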

Intelligent systems now assist with root cause analysis and include basic self-healing features. When incidents occur, preapproved repair actions execute automatically within predefined limits, which accelerates recovery while preserving system stability and human control. At the same time, SRE teams need new and evolving capabilities, including data interpretation, system behavior monitoring, and AI-assisted observability.
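One way to picture preapproved actions running inside fixed limits is a small policy check that sits in front of any automated repair. The sketch below is a minimal, hypothetical example: the class name, the action names, and the hourly cap are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class RemediationPolicy:
    allowed_actions: frozenset            # the preapproved action names
    max_actions_per_hour: int = 3         # fixed limit on automated repairs
    recent: list = field(default_factory=list, repr=False)  # execution times

    def decide(self, action, now):
        """Return 'execute' or 'escalate' for an action proposed at `now` (seconds)."""
        if action not in self.allowed_actions:
            return "escalate"             # never run anything not preapproved
        # Keep only executions from the last hour.
        self.recent = [t for t in self.recent if now - t < 3600]
        if len(self.recent) >= self.max_actions_per_hour:
            return "escalate"             # too many automatic repairs: hand to a human
        self.recent.append(now)
        return "execute"

policy = RemediationPolicy(frozenset({"restart_pod"}), max_actions_per_hour=2)
decisions = [
    policy.decide("drop_database", 0),    # not preapproved
    policy.decide("restart_pod", 0),
    policy.decide("restart_pod", 10),
    policy.decide("restart_pod", 20),     # exceeds the hourly cap
]
```

Escalating when the cap is reached reflects a common design choice: repeated automatic repairs usually mean the underlying cause has not been fixed.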

Routine SRE Workflow Automation

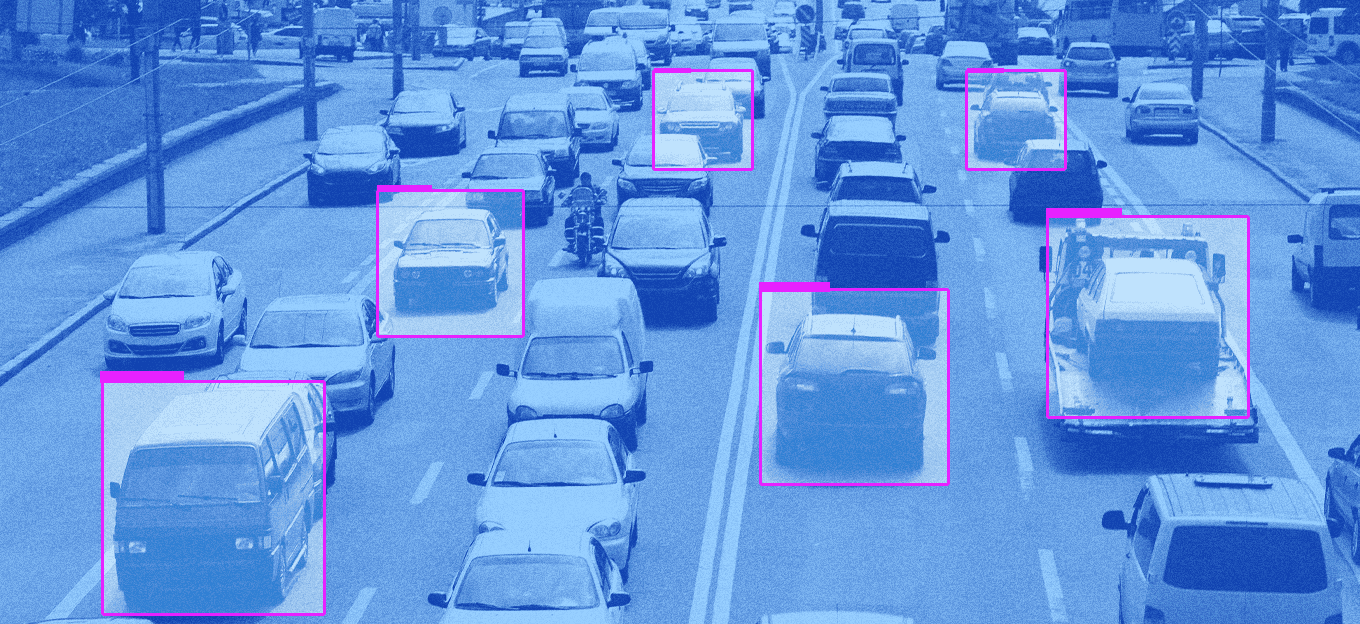

When speed is critical, AI handles fast, data-intensive SRE workflows more effectively than humans. This area is increasingly supported by AIOps frameworks that embed automation throughout IT operations. Good candidates for automation include monitoring system logs, detecting anomalous behavior, linking alerts across apps and servers, triaging issues quickly, anticipating demand shifts, and performing system repairs.

With these capabilities, teams identify issues sooner and react faster. Mean time to detect (MTTD) decreases, and fixes follow more quickly. By correlating alerts across varied systems, AI surfaces the likely source of a problem when trouble hits. That clarity helps engineers and reliability experts resolve root causes without delay.
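Alert correlation can be sketched in its simplest form as grouping alerts that fire close together in time. The example below uses time proximity only, which is an assumption made to keep it short; production AIOps tools typically also weigh service topology and alert content. The alert tuples and the 120-second window are hypothetical.

```python
def correlate_alerts(alerts, window_seconds=120):
    """Group alerts that fire within window_seconds of the previous alert.

    alerts: list of (timestamp_seconds, service, message) tuples.
    Returns a list of groups, each a time-ordered list of alerts.
    """
    groups = []
    for alert in sorted(alerts, key=lambda a: a[0]):
        # Compare against the most recent alert in the current group.
        if groups and alert[0] - groups[-1][-1][0] <= window_seconds:
            groups[-1].append(alert)
        else:
            groups.append([alert])
    return groups

alerts = [
    (100, "db", "slow queries"),
    (130, "api", "5xx rate high"),
    (900, "cache", "evictions rising"),
    (160, "web", "timeouts"),
]
groups = correlate_alerts(alerts)
# The db, api, and web alerts land in one group; the cache alert stands alone.
```

Presenting the first group as a single incident, ordered by time, gives the responder one thread to follow instead of three separate pages.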

Enhancing Incident Response with AI Tools

While systems operate normally, AI continuously learns patterns from live telemetry. Instead of enforcing fixed limits, it models a baseline that shifts as norms change over time. Because real-world workloads change constantly, rigid rules often miss subtle changes. By continually adjusting what counts as typical, systems spot actual disruptions faster than before.
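A shifting baseline of this kind can be approximated with an exponentially weighted moving average and variance, where recent samples count more than old ones. The sketch below is one simple way to do it, not the method of any specific tool; the class name, the smoothing factor `alpha`, the `tolerance` multiplier, and the warm-up count are assumptions.

```python
class AdaptiveBaseline:
    """Tracks an exponentially weighted mean and variance of a metric."""

    def __init__(self, alpha=0.1, tolerance=3.0, warmup=5):
        self.alpha = alpha          # weight given to each new sample
        self.tolerance = tolerance  # allowed deviation, in standard deviations
        self.warmup = warmup        # samples to observe before flagging anything
        self.mean = None
        self.var = 0.0
        self.count = 0

    def observe(self, value):
        """Return True if value deviates from the baseline, then update the baseline."""
        if self.mean is None:
            self.mean = float(value)
            self.count = 1
            return False
        deviation = value - self.mean
        std = self.var ** 0.5
        anomalous = (
            self.count >= self.warmup
            and std > 0
            and abs(deviation) > self.tolerance * std
        )
        # Incremental update of the exponentially weighted mean and variance.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        self.count += 1
        return anomalous

baseline = AdaptiveBaseline()
normal = [baseline.observe(v) for v in [100, 102, 98, 101, 99, 100, 102, 98, 101, 99]]
spike = baseline.observe(500)   # far outside the learned range
```

Because the baseline keeps updating, a workload that drifts slowly is absorbed into the norm, while a sudden jump still stands out.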

The AI-generated runbook is a central element of modern incident response. The software behind it takes live incident details, such as alerts, recent deployments, and impacted services, and compares them to past behavior to suggest possible underlying issues. Instead of relying on manual steps, these runbooks lay out the key lines of investigation and offer ready-to-run fixes, all built with safeguards to keep actions safe in live systems.
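The comparison of live incident details against past behavior can be sketched as a similarity search over earlier incidents. The example below uses Jaccard similarity on sets of signal strings, which is a deliberately simple stand-in for whatever matching a real system performs; the signal format, field names, and incident records are hypothetical.

```python
def jaccard(a, b):
    """Overlap between two collections of signals, from 0.0 to 1.0."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def suggest_runbooks(current_signals, past_incidents, top_n=2):
    """Rank past incidents by similarity to the current one.

    past_incidents: list of dicts with 'signals', 'cause', and 'runbook' keys.
    Returns up to top_n (score, cause, runbook) tuples, best match first.
    """
    scored = [(jaccard(current_signals, p["signals"]), p) for p in past_incidents]
    scored = [s for s in scored if s[0] > 0]          # drop unrelated incidents
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(round(score, 2), p["cause"], p["runbook"]) for score, p in scored[:top_n]]

past = [
    {"signals": {"deploy:checkout", "alert:5xx", "service:checkout"},
     "cause": "bad deploy", "runbook": "roll back last release"},
    {"signals": {"alert:disk_full", "service:db"},
     "cause": "disk exhaustion", "runbook": "expand volume"},
]
current = {"deploy:checkout", "alert:5xx", "service:cart"}
suggestions = suggest_runbooks(current, past)
```

The output is a ranked suggestion, not a decision: any fix it points to would still pass through the safeguards described above before running.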

Runbooks adapt over time, learning from every incident to sharpen the next response. Response becomes faster and more consistent, reducing operational friction.

Managing Complex Distributed Environments

When companies operate across cloud, on-premises, and hybrid environments, managing resources becomes more complex. Machine learning (ML) tools improve forecasting accuracy by weighing factors such as business cycles, local activity patterns, weather shifts, and seasonal rhythms.

When usage shifts unexpectedly, intelligent scaling adjusts resources in real time, avoiding both excess and insufficient capacity. By spotting trouble early, AI highlights vulnerable hardware or software components, so repairs happen before failures bring systems down. These features enable worldwide oversight of resources across regions, zones, and setups, whether cloud-based or legacy, to help teams scale and stay resilient.
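The step from a demand forecast to a capacity decision can be shown with simple arithmetic. The sketch below assumes a forecast in requests per second is already available and converts it into a replica count with headroom and hard bounds; the function name, the 25% headroom, and the replica limits are illustrative assumptions.

```python
import math

def replicas_for(forecast_rps, capacity_per_replica_rps,
                 headroom=0.25, min_replicas=2, max_replicas=50):
    """Convert forecast load into a bounded replica count.

    headroom adds spare capacity on top of the forecast; the min and max
    bounds guard against both under-provisioning and runaway scale-up.
    """
    needed = math.ceil(forecast_rps * (1 + headroom) / capacity_per_replica_rps)
    return max(min_replicas, min(max_replicas, needed))

typical = replicas_for(900, 100)       # 900 * 1.25 / 100 = 11.25, rounded up
quiet = replicas_for(10, 100)          # floor of min_replicas applies
surge = replicas_for(100_000, 100)     # ceiling of max_replicas applies
```

The upper bound matters as much as the lower one: it keeps a bad forecast from scaling a service far beyond what the budget or downstream systems can absorb.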

Organizational Readiness and Measurable Outcomes

Introducing AI into SRE workflows requires clear ownership and accountability. Automation lives inside SRE teams, yet the tools they run on come from elsewhere. Effective implementation depends on cross-functional collaboration: leaders provide direction, engineers build frameworks, data experts shape models, and product leads define business objectives.

Metrics show whether using AI in SRE is working. Teams can evaluate success by tracking incident frequency and severity, improvements in detection and recovery times, progress in preventing problems before they occur, and long-term improvements in system reliability.
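Detection and recovery times can be computed directly from incident records. The sketch below assumes each record carries start, detection, and resolution timestamps in seconds, and it measures recovery from the start of the incident; teams define these metrics in different ways, so the field names and definitions here are assumptions.

```python
from statistics import mean

def reliability_metrics(incidents):
    """Summarize incidents into count, mean time to detect, and mean time to recover.

    incidents: list of dicts with 'started', 'detected', 'resolved' (seconds).
    """
    if not incidents:
        return {"count": 0, "mttd_seconds": None, "mttr_seconds": None}
    mttd = mean(i["detected"] - i["started"] for i in incidents)
    mttr = mean(i["resolved"] - i["started"] for i in incidents)
    return {"count": len(incidents), "mttd_seconds": mttd, "mttr_seconds": mttr}

# Hypothetical incident log for one reporting period.
incidents = [
    {"started": 0, "detected": 60, "resolved": 600},
    {"started": 1000, "detected": 1180, "resolved": 2200},
]
summary = reliability_metrics(incidents)
```

Comparing the same summary across periods, before and after an AI-assisted workflow is introduced, is what turns these numbers into evidence.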

Analyzing these metrics provides a clear picture of how reliable, efficient, and automated a system really is. Sustained effectiveness depends less on technology and more on culture and ongoing learning. Teams require proper training to understand AI frameworks effectively. Quality data collection matters just as much as setting clear limits on where automation can go safely.

Looking Ahead: From Assistance to Autonomy

The future of AI-driven SRE includes deeper automation and increasingly autonomous systems. New tools are emerging, including generative runbooks, automated remediation scripts, infrastructure-as-code generation, and auto-summarized error reports. As systems grow more tangled, the ability to correlate patterns across layers, such as services, zones, and clouds, will become increasingly critical to success.

Still, progress creates fresh challenges. Safety and trust must keep automation in check, since automated steps should not introduce additional risks. Reliable AI performance also depends on accurate data and clear visibility into what the systems are doing. For SRE teams, closing skill gaps and building confidence in AI-powered operations are as important as deploying new tools.

What once required constant manual effort now falls within AI’s domain, where repetitive tasks are handled with speed and precision. Rather than duplicating human work, these tools dig into tangled data patterns, areas where people inevitably stall. Engineers still lead design decisions but gain sharper clarity when it matters most. Reliability improves without adding layers of oversight. Scalability finds footing where overload used to settle, and resilience strengthens in structures that learn from each incident. Stability becomes less of a surprise and more of an expected outcome. Those who embrace this blend, not with fear but with active partnership, will discover fewer cracks spreading through layered systems.

Baskar Chinnaiah is a director and site reliability engineer for a global financial services firm. With more than two decades of IT experience, he specializes in ensuring the high availability, performance, and scalability of enterprise cloud systems, building reliability roadmaps, leading incident response, fostering automation, and cultivating a culture of observability excellence across Fortune 500 organizations in the finance and network products industries. Chinnaiah holds a master’s degree in computer applications and a bachelor’s degree in computer science from Bharathidasan University. Connect with him on LinkedIn.
